Key Assignment Draft As is true with all components in any computing environment, security considerations are paramount for operating systems as well. Performing risk assessments and identifying mitig

UPGRADE INFRASTRUCTURE TO MODERN DISTRIBUTED, VIRTUAL SYSTEM

Upgrade Legacy Web Infrastructure Systems to Modern Distributed, Virtual, Cloud-Native System

Table of Contents

Project Outline

Existing Inventory of Web Infrastructure in Use

Proposed Infrastructure Upgrade:

Figure 1

Upgrade Plan

OS Processor and Core

Infrastructure Upgrade Benefits

Steps Involved in Upgrading the Infrastructure (Processor and Core)

Estimating system upgrade costs

Due Diligence on Requirements

Design

Business Approval

Prepare for Migration from Legacy System to New Architecture

Security Considerations

Testing

Business Case for Upgrading to Virtual, Container Supported Environment

References

Scheduling Algorithms

Benefits of Round-Robin (RR) and Completely Fair Scheduling (CFS) Algorithm to the enterprise:

Challenges of Round-Robin (RR) and Completely Fair Scheduling (CFS) Algorithm to the enterprise:

Round-Robin (RR) Scheduling Algorithm in Virtual Environment:

Advantages:

Disadvantages:

Completely Fair Scheduling Algorithm (CFS) in Virtual Environment:

Advantages:

Challenges:

References:

OS Concurrency Mechanism

Benefits of Mutual Exclusion (Mutex) and Monitors Concurrency Mechanism to the Enterprise:

Challenges of Mutual Exclusion (Mutex) and Monitors Concurrency Mechanism to the Enterprise:

Some Steps to Overcome Concurrency Mechanism Challenges:

Mutual Exclusion (Mutex) Concurrency Mechanism:

Solution:

Monitors Concurrency Mechanism:

Monitor Concurrency in Java:

How to create a concurrent application in Java:

How to define and start a Thread in Java:

Synchronization in Java:

References:

OS Security Risks and Mitigation Strategy

Future Considerations Using Emerging Technology

Project Outline

Verizon, a wireless telecom service company, sells products and services through web, customer care, and retail channels. As more business moves online, the entire web infrastructure needs a complete overhaul. The proposed upgrade aims to support near-term business needs by running current technology workloads securely, and to future-proof the infrastructure so that it is secure, scalable, resilient, redundant, performant, and highly available.

Existing Inventory of Web Infrastructure in Use

Number of physical hosts: ~2000

Number of multi-processor, multi-core hosts (2+ physical CPUs & 8+ cores): 1200

Number of single-processor, multi-core hosts (1 physical CPU & 8+ cores): 800

Operating System: Linux

Distribution:

Red Hat Enterprise Linux (RHEL) versions ranging from 4.x to 7.x

Oracle Linux versions ranging from 4.x to 5.x

Kernel versions: ranging from 2.x to 3.x

Server Form Type: Mix of tower and rack-based servers

Proposed Infrastructure Upgrade:

Number of physical hosts: ~2000

Processor: multi-processor, multi-core hosts (2+ physical CPUs & 32+ cores)

CPU Socket or Slot: 2-4

Operating System: Linux, amd64 architecture

Distribution: Red Hat Enterprise Linux (RHEL) 8.x, Ubuntu 20.04 LTS

Kernel version: 5.x-generic

Hypervisor: VMware vSphere with ESXi (Type 1)

Server Form Type: Blade servers

Kubernetes version: v1.20 running a standard cluster of 3 master HA nodes and 24 worker nodes. (1 node refers to 1 VM)

Docker runtime: v1.18

Infrastructure Design to be implemented (see Figure 1): All new infrastructure will be virtualized using VMware vSphere and ESXi hypervisors. 50% of the upgraded systems will move to a container environment that uses the Kubernetes orchestration engine and the Docker runtime, which provide an abstraction over the operating system.

Figure 1

Legend:

App – Software applications

Libs – Dependency Libraries

OS – Operating System

VM – Virtual Machine(s)

Underlying physical servers: multi-processor, multi-core systems running the latest host operating system (RHEL 8.x or Ubuntu 20.04 LTS)

OS Processor and Core

Infrastructure Upgrade Benefits

Many physical servers are underutilized because each is dedicated to a specific application. As a result, computing capacity is wasted and costs are high. Moreover, many legacy single-processor systems cannot meet the demands of modern business workloads, which require parallel processing on multi-processor, multi-core systems.

Virtualization is a layer of abstraction over physical servers that turns a single physical server into multiple virtual machines, so that different operating systems can run alongside each other and efficiently share the underlying hardware. A hypervisor is the software that makes virtualization possible.

To implement virtualization and fully reap its benefits, the hardware needs to be upgraded to multi-processor, multi-core systems. Some of the significant benefits of that upgrade are:

Higher capacity utilization, meaning less wasted compute and lower costs.

Virtual machines (VMs) on multi-processor, multi-core systems let the enterprise run more than one instance of an application, distributed across multiple VMs, regions, and data centers, resulting in high availability.

Fast recovery from failures (resilience) by spinning up a new VM running a new instance of the same application, with little or no downtime.

With a multi-core system, several CPU cores are virtually assigned to each VM. Depending on application needs, VMs can be destroyed and recreated, which allows scaling up or down efficiently.

A multi-processor, multi-core system combined with virtualization lets diverse applications that require different operating systems run in parallel on a single physical server.

Because of the distributed architecture, OS upgrades and security patches can be rolled out with little or no downtime, which keeps the system robust and secure. On the legacy physical systems, by contrast, the same work costs considerable time, effort, money, and downtime because of their monolithic nature.

Steps Involved in Upgrading the Infrastructure (Processor and Core)

Estimating system upgrade costs

Use established estimation methods such as the Constructive Cost Model (COCOMO), Function Point Analysis (FPA), and the Putnam model to estimate the budget and the resources needed to support current operations while preparing to replace old systems.
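As one illustration of the estimation methods named above, Basic COCOMO computes effort as a * KLOC^b and schedule as c * effort^d. The sketch below assumes the commonly published "organic" coefficients (a = 2.4, b = 1.05, c = 2.5, d = 0.38). The class name and the 50 KLOC input are hypothetical and serve only to show the arithmetic. COCOMO estimates software effort, so it would cover only the application-rebuild portion of an upgrade budget.

```java
// Basic COCOMO sketch. The 50 KLOC input is a made-up figure for illustration,
// not an estimate for any real system.
class CocomoSketch {
    // Effort in person-months: a * KLOC^b
    static double effort(double kloc, double a, double b) {
        return a * Math.pow(kloc, b);
    }

    // Development schedule in months: c * effort^d
    static double schedule(double effortPersonMonths, double c, double d) {
        return c * Math.pow(effortPersonMonths, d);
    }

    public static void main(String[] args) {
        double kloc = 50.0; // hypothetical size of the code to be rebuilt
        double e = effort(kloc, 2.4, 1.05);   // organic-mode coefficients (assumed)
        double t = schedule(e, 2.5, 0.38);
        System.out.printf("effort = %.1f person-months, schedule = %.1f months%n", e, t);
    }
}
```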

Due Diligence on Requirements

Take stock of the current inventory and identify which servers need a rebuild (upgrade) and which need to be replaced.

Audit systems to determine their business value, criticality, and where there are opportunities to modernize.

Perform due diligence on hardware compatibility when upgrading to a multi-processor, multi-core system. Consider replacing old tower servers with efficient rack or blade servers.

Design

Conduct a thorough architecture design review to ensure the new design supports future system growth iteratively, without requiring a complete replacement after a few years.

Perform a risk analysis that weighs possible disruption to the business against the risk of keeping old systems as-is, including the maintenance of outdated systems.

Test the old systems' performance to uncover further potential issues. Poor performance or significant security risks can justify a complete replacement or selective upgrades (rebuilds) of systems.

Plan for training engineers in modern DevOps processes and upskill them to operate the upgraded system. Estimate and include the cost of this training effort.

Business Approval

Get buy-in from management by walking through the design architecture, risks, and total estimated cost.

Prepare for Migration from Legacy System to New Architecture

Migrate non-critical applications first to the upgraded multi-processor, multi-core virtual system running the latest Linux operating system.

Back up the application and system data of the existing legacy system.

Migrate application data to the new virtual systems.

Prepare a fail-over and roll-back plan for cutting back over to the old system if any failure occurs during migration.

Post-migration, run a thorough application performance test and review the results before deciding to move critical applications.

Security Considerations

Plan ways to protect systems before, during, and after the upgrade to avoid data loss, outages, or exposure, and ensure the new environment adheres to governmental and industry compliance regulations.

Build an automation pipeline to upgrade the operating system at a regular cadence (for example, every quarter) and prepare a solid plan for patching infrastructure when Common Vulnerabilities and Exposures (CVEs) are identified.

Testing

Perform post-upgrade tests that measure the expected virtualization benefits such as resiliency, availability, scalability, application compatibility, maintenance, and support, to confirm that those benefits are realized.

Business Case for Upgrading to Virtual, Container Supported Environment

In the competitive telecom industry, Verizon has a chance to outperform competitors by offering a modern, robust, lightweight solution to its customers.

Verizon has already kick-started its digital transformation journey, replacing monolithic applications with a loosely coupled microservices architecture, which requires the underlying infrastructure to be virtualized.

The upgrade is intended to meet current business needs as well as the demands and trends of future technology advances, making Verizon ready for future business.

Today, security breaches cost companies billions of dollars. Legacy systems are less resistant to cyberattacks, which is one of the key reasons to move toward an agile virtual environment where maintenance and support take less effort.

Identify legacy applications that are not compatible with the upgraded virtual system and plan for their business continuity. At the same time, prepare to build new applications on a microservices architecture that can eventually phase out those old applications.

The virtualization benefits of high performance, scalability, availability, resiliency, and security are expected to increase business and revenue well beyond the cost of the system upgrade.

References

Firesmith, D. (2017, August 14). Multi-core and Virtualization: An Introduction [Blog post]. Retrieved from http://insights.sei.cmu.edu/blog/multicore-and-virtualization-an-introduction/

Virtual Servers and Platform as a Service. (2014, January 1). ScienceDirect. https://www.sciencedirect.com/science/article/pii/B9780124170407000058?via%3Dihub

5 Benefits of Virtualization. (2021, April 23). IBM. https://www.ibm.com/cloud/blog/5-benefits-of-virtualization

Sawant, V. (2020a, December 28). A brief guide to legacy system modernization. Rackspace. https://www.rackspace.com/blog/brief-guide-legacy-system-modernization

Kaneda, K., Oyama, Y., & Yonezawa, A. (2008). Virtualizing a Multiprocessor Machine on a Network of Computers.

Editor. (2020, February 27). Legacy system modernization: How to transform the enterprise for digital future. AltexSoft. Retrieved September 22, 2021, from https://www.altexsoft.com/whitepapers/legacy-system-modernization-how-to-transform-the-enterprise-for-digital-future/.

Scheduling Algorithms

Based on the proposal outlined in the previous section, the environment chosen for the upgrade is a virtual environment. The previous section covered the major benefits and presented the business case for the upgrade. Verizon has already kick-started its digital transformation journey to deliver an innovative customer experience that not only meets the needs of today's business but can also handle future growth and outperform competitors.

To support the upgrade to a dynamic virtual environment that runs the latest versions of Linux (Red Hat Enterprise Linux 8.x, Ubuntu 20.04 LTS) and the kernel (5.x), it is important to choose a scheduling algorithm that is compatible with that environment and capable of delivering the full benefits of virtualization. The scheduler is the part of the operating system that selects one process from the runnable processes competing for execution and assigns it compute resources. Scheduling is thus the act of choosing the next process to run.

Choosing a suitable scheduling algorithm is an essential step in virtualization because it affects the business. For example, customers who order phones or add phone lines through the web applications should experience fast response times. At the same time, all order requests arriving simultaneously from users across the country should be fulfilled.

The scheduling algorithms proposed as part of the Verizon enterprise web infrastructure upgrade to modern, distributed virtual systems are the Round-Robin (RR) scheduling algorithm and the Completely Fair Scheduling (CFS) algorithm.

Benefits of Round-Robin (RR) and Completely Fair Scheduling (CFS) Algorithm to the enterprise:

Both RR and CFS ensure fairness: every process gets an equal share of the CPU, and processes are distributed across multiple CPUs and cores. User requests that arrive at the same time can therefore execute in parallel on multiple processors, which improves performance.

With both RR and CFS, the average wait time of a process is low compared to other algorithms when CPU burst times vary, which contributes to better overall performance.

CFS doesn't use process priorities to decide the absolute time slice (or quantum) a process gets on a processor. Instead, the time slice is calculated from the overall load of the system, which results in faster response times in most cases.

In Linux, every process has two kinds of priority: a nice value (the priority for normal, typically interactive, user processes) and a real-time priority (see the CFS section for details). Together they handle both I/O-bound (user-interactive) and CPU-bound processes well, which results in higher throughput.

The Completely Fair Scheduler (CFS) has been the default process scheduler in Linux since kernel 2.6.23, released in 2007. It went through multiple iterations of evaluation before being accepted as the default. Because the proposed infrastructure will be a virtual environment running Linux, using this tried and tested algorithm minimizes risk for the enterprise.

The modular scheduler framework that CFS is part of also supports the classic real-time policies SCHED_FIFO and SCHED_RR. This combination lets the operating system on a multi-processor, multi-core system serve both normal and real-time workloads efficiently, and an efficient system supports business growth.

The CFS algorithm supports Symmetric Multiprocessing (SMP), so it can balance processes across the multiple CPUs of the proposed multi-processor, multi-core system.

The design of CFS is extensible in that it allows new schedulers to be integrated. If a future business innovation requires a newer scheduler, it can be integrated by registering it with the generic (core) scheduler.

Group scheduling is another feature that CFS supports. Prompt handling matters for user-facing web application requests. Many web servers, such as Nginx, have a main process and several child (worker) processes, and CFS can treat such processes as a group rather than as individuals for scheduling purposes. This results in a quicker response for end users.

Challenges of Round-Robin (RR) and Completely Fair Scheduling (CFS) Algorithm to the enterprise:

When a guest OS scheduler has to deal with many processes, the performance of that VM degrades. In addition, the VM can become a noisy neighbor for other VMs running on the same physical host.

Whether a guest OS scheduler on a VM can use idle resources on the underlying physical host once it exceeds its entitled resource limits is still an open challenge.

Every process-scheduling algorithm has to deal with conflicting goals (e.g., throughput vs. response time). If an algorithm focuses solely on fast response time, throughput takes a hit. Hence both RR and CFS implement a trade-off that aims to provide balanced performance.

The CFS load-balancing solution is still evolving for multi-processor, multi-core virtual environments, which can leave some CPU cores underutilized. This wastes resources to an extent and adds to cost.

Both RR and CFS can incur frequent context switches, which reduce CPU efficiency.

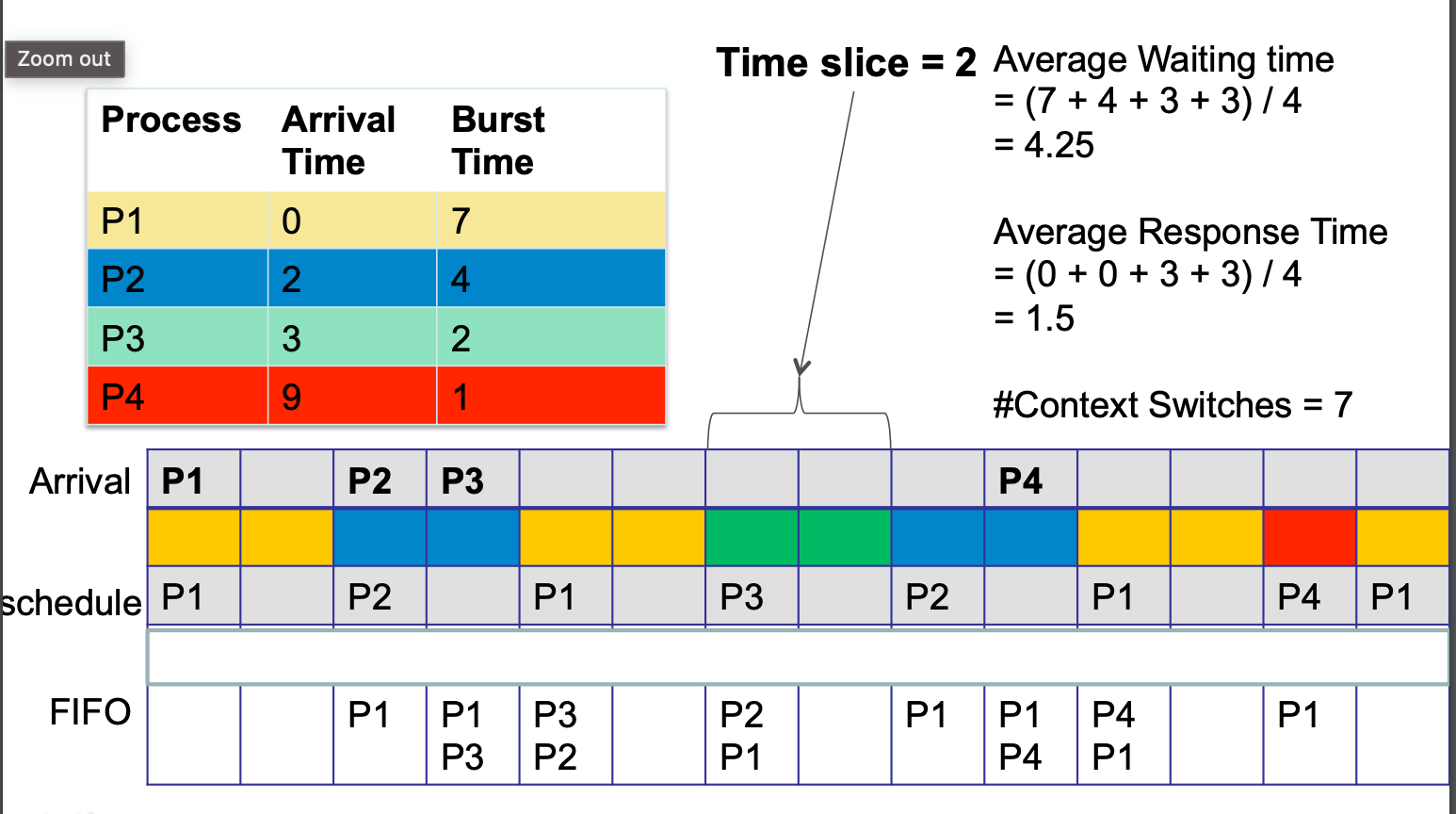

Round-Robin Scheduling Algorithm is one of the preemptive type scheduling algorithms. For execution, each process is allotted with a fixed time, called time slice (or quantum).

Once a process is executed for the given time, the scheduler preempts that process, moves it to the tail of the ready queue (FIFO), and the next process executes for the given time.

The length of the time slice ranges from 10-100ms. It uses array-based queues, and context switching is used to save the states of preempted processes.

In a Virtual Environment, both Host OS and Guest OS can implement Round-Robin (RR) Scheduling algorithm. The Host OS schedules and run multiple VM processes distributed across multiple different processors. Each VM runs Guest OS with its RR scheduling algorithm distributing its process in the ready queue on RR fashion utilizing multi-cores of a CPU.
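The round-robin behavior described above can be sketched as a small single-CPU simulation. This is a simplified model under stated assumptions: all processes arrive at time 0, context-switch cost is ignored, and the class and method names are hypothetical. It returns the average waiting time so that different quantum sizes can be compared.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class RoundRobinSketch {
    /** Average waiting time for the given CPU bursts, all arriving at time 0. */
    static double averageWait(int[] bursts, int quantum) {
        int n = bursts.length;
        int[] remaining = bursts.clone();
        int[] finish = new int[n];
        Deque<Integer> ready = new ArrayDeque<>();   // FIFO ready queue of process indexes
        for (int i = 0; i < n; i++) ready.addLast(i);

        int clock = 0;
        while (!ready.isEmpty()) {
            int p = ready.pollFirst();               // head of the queue runs next
            int run = Math.min(quantum, remaining[p]);
            clock += run;
            remaining[p] -= run;
            if (remaining[p] > 0) {
                ready.addLast(p);                    // preempted: back to the tail
            } else {
                finish[p] = clock;                   // process completed
            }
        }

        long totalWait = 0;
        for (int i = 0; i < n; i++) {
            totalWait += finish[i] - bursts[i];      // waiting = turnaround - burst
        }
        return (double) totalWait / n;
    }

    public static void main(String[] args) {
        System.out.println(averageWait(new int[] {24, 3, 3}, 4));
        System.out.println(averageWait(new int[] {10, 10, 10}, 1));
    }
}
```

With three equal bursts of 10 time units, a quantum of 1 gives a higher average wait than a quantum of 10 in this model, which matches the disadvantage noted for equal burst lengths.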

Advantages:

RR is fair, since every process gets an equal share of the CPU. Moreover, in a virtual multi-processor environment, RR scheduling helps distribute processes evenly across processors, which improves efficiency.

Low average wait time when burst times vary, resulting in faster response times.

Every process is given a fair amount of time to execute on a round-robin basis, which prevents starvation.

Disadvantages:

Throughput is low when the quantum is short, because frequent context switches reduce CPU efficiency.

High average wait time when burst times have equal lengths.

Completely Fair Scheduling Algorithm (CFS) in Virtual Environment:

The Completely Fair Scheduler (CFS) has been the default process scheduler in Linux since kernel 2.6.23, released in 2007. Since Linux with kernel 5.x is the proposed OS for the virtual environment, CFS will be the preferred process-scheduling algorithm.

CFS aims to be fair to every runnable task in the system by distributing CPU resources equally.

Ingo Molnar, the author of CFS, states: "CFS basically models an 'ideal, precise multi-tasking CPU' on real hardware."

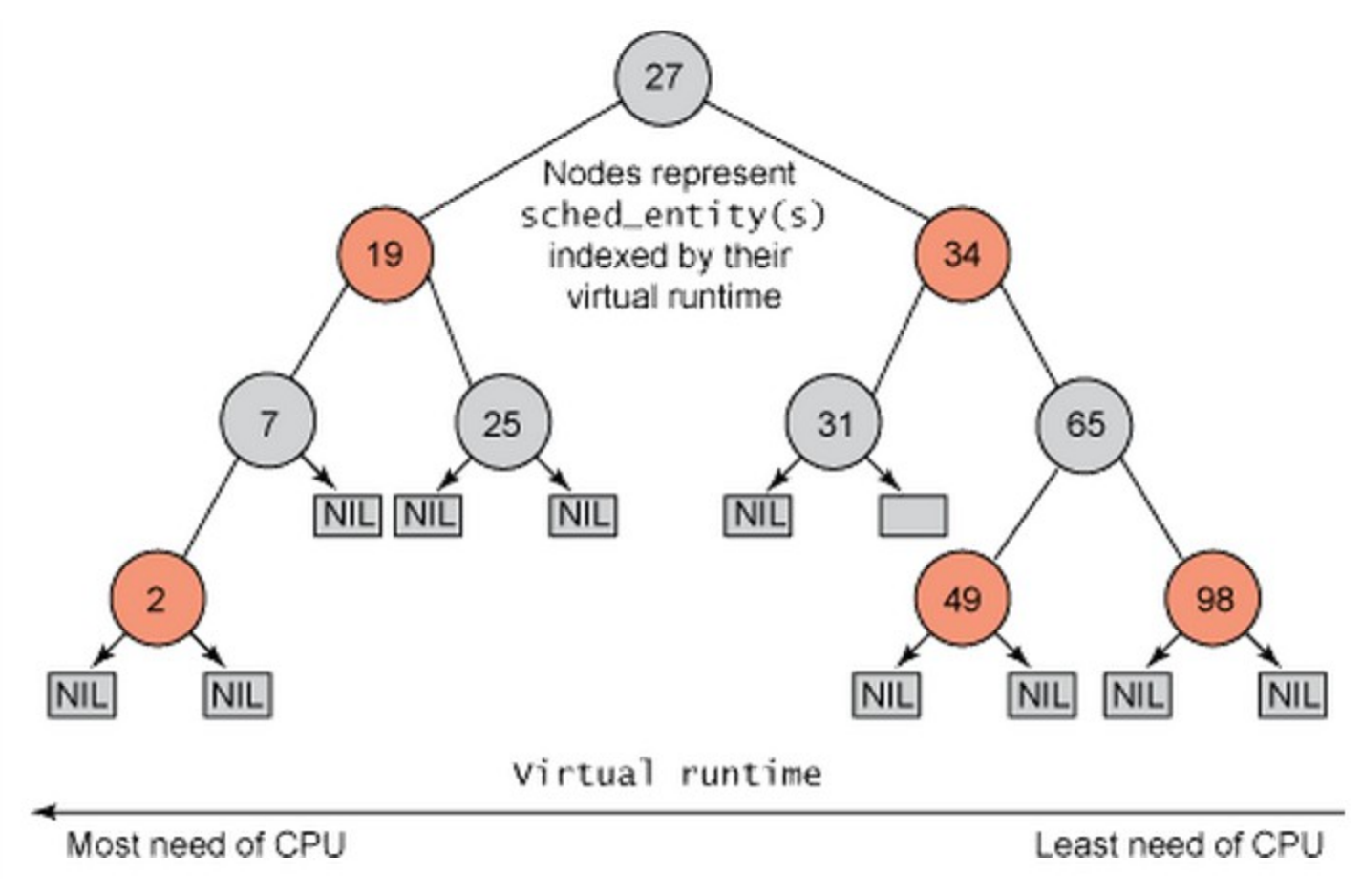

CFS implements fairness by tracking each process's vruntime (virtual runtime): the amount of time the process has spent on the processor, weighted by its priority. The process with the lowest vruntime has had the least CPU time relative to its fair share, so the CFS scheduler picks it to run next.

This logic is implemented with a red-black tree (RBTree), a self-balancing binary search tree keyed by vruntime. The process that has spent the least time on the processor is stored as the leftmost node. In this way CFS builds a timeline of future process execution, and the scheduler always picks the leftmost node as the next process to run.
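The "pick the leftmost node" idea can be sketched in Java with a TreeSet, which is backed by a red-black tree. This is an illustrative model, not the kernel's implementation: the Task class, the tick units, and the simplified update rule (vruntime grows by slice * 1024 / weight) are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.TreeSet;

class CfsSketch {
    static final class Task {
        final String name;
        final int weight;   // assumption: 1024 stands for a default-priority task
        long vruntime;      // weighted runtime in arbitrary ticks

        Task(String name, int weight) {
            this.name = name;
            this.weight = weight;
        }
    }

    /** Makes `slices` scheduling decisions and returns the names in run order. */
    static List<String> run(List<Task> tasks, int slices, long sliceTicks) {
        // Ordered by vruntime; the name breaks ties so no task is dropped as a duplicate.
        TreeSet<Task> timeline = new TreeSet<>(
            Comparator.comparingLong((Task t) -> t.vruntime).thenComparing(t -> t.name));
        timeline.addAll(tasks);

        List<String> order = new ArrayList<>();
        for (int i = 0; i < slices; i++) {
            Task next = timeline.pollFirst();                  // leftmost = smallest vruntime
            order.add(next.name);
            next.vruntime += sliceTicks * 1024 / next.weight;  // heavier tasks age more slowly
            timeline.add(next);                                // re-insert at its new position
        }
        return order;
    }

    public static void main(String[] args) {
        List<Task> tasks = new ArrayList<>();
        tasks.add(new Task("A", 1024));
        tasks.add(new Task("B", 2048));
        System.out.println(run(tasks, 6, 10));
    }
}
```

The task is removed from the tree before its key changes and re-inserted afterwards, because mutating the sort key of an element that is still inside a TreeSet would corrupt the ordering.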

In Linux, every process has two kinds of priority: a nice value and a real-time priority. The nice value is a user-space setting that controls the priority of a normal process under CFS. It ranges from -20 to +19, where -20 is the highest priority, 0 is the default, and +19 is the lowest: the lower the nice value, the higher the priority. For example, if process 1 has a nice value of 10 and process 2 has a nice value of 5, process 2 has the higher priority. The real-time priority applies to processes under the real-time policies (SCHED_FIFO and SCHED_RR) and ranges from 1 to 99; the higher the value, the higher the priority.
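To make the nice-value example concrete, the sketch below approximates how CFS turns nice values into CPU shares. It assumes the commonly documented rule that nice 0 maps to a weight of 1024 and each nice step changes the weight by a factor of about 1.25; the kernel uses a precomputed table, so the numbers here are approximations, and the class and method names are hypothetical.

```java
class NiceWeightSketch {
    /** Approximate CFS load weight: 1024 at nice 0, about 1.25x per nice step. */
    static double weight(int nice) {
        return 1024.0 / Math.pow(1.25, nice);
    }

    /** Fraction of one CPU a task at `nice` gets when competing with one task at `otherNice`. */
    static double share(int nice, int otherNice) {
        double w = weight(nice);
        return w / (w + weight(otherNice));
    }

    public static void main(String[] args) {
        // Process 1 has nice 10 and process 2 has nice 5, as in the example above.
        System.out.printf("process 1 share: %.3f%n", share(10, 5));
        System.out.printf("process 2 share: %.3f%n", share(5, 10));
    }
}
```

Under these assumptions, process 2 (nice 5) receives the larger share, and the two shares always add up to one CPU.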

The CFS design supports modular, extensible schedulers. It comprises:

Generic core scheduler (kernel/sched.c; kernel/sched/core.c in 5.x kernels) - implements the default scheduling framework,

CFS-specific scheduler (kernel/sched_fair.c; kernel/sched/fair.c in 5.x kernels) - implements the SCHED_NORMAL, SCHED_BATCH, and SCHED_IDLE policies,

Real-time (RT) scheduler (kernel/sched_rt.c; kernel/sched/rt.c in 5.x kernels) - implements the classic Round-Robin (SCHED_RR) and First In First Out (SCHED_FIFO) policies with some slight changes.

The generic (core) scheduler invokes the appropriate scheduler (CFS or real-time) based on the scheduling policy of the process: CFS for a normal process and the RT scheduler for a real-time process. A runnable real-time process always takes precedence over a normal process.

Advantages:

One of the significant changes with CFS is that it uses a red-black tree (RBTree) data structure, whereas earlier schedulers used array queues. As a result, it doesn't have to deal with the problem of switching between active and expired arrays.

CFS maintains dynamic time slices, unlike algorithms based on fixed time slices, which makes process scheduling and execution efficient.

The design of CFS is flexible in that it allows new schedulers to be integrated. Future needs can be met by registering a new scheduler with the generic (core) scheduler, which makes this a robust choice.

The average wait time of a process is low when CPU burst times vary.

CFS provides better response time (lower latency) than other algorithms.

Challenges:

CFS constantly recalculates vruntime, which can add overhead.

CFS on multi-core systems can incur costly CPU context switches.

The CFS load-balancing solution is still evolving, which can leave some CPU cores underutilized.

References:

Kalin, M. (2019). CFS: Completely fair process scheduling in Linux. Opensource.com. https://opensource.com/article/19/2/fair-scheduling-linux

Process/thread scheduling. Process/Thread Scheduling - Operating Systems Updated 2021-07-20 documentation. (n.d.). Retrieved September 24, 2021, from https://os.cs.luc.edu/scheduling.html.

Completely Fair Scheduler | Linux Journal. (2009). Linux Journal. https://www.linuxjournal.com/node/10267

Garg, R., & Verma, G. (2017). Operating Systems [OP]: An Introduction. Mercury Learning & Information.

OS Concurrency Mechanism

Based on the proposal in the Project Outline section, the environment chosen for the upgrade is a virtual Distributed Computing Environment (DCE), i.e., a private cloud computing environment spread across multiple data centers. DCE is a software technology used to set up and manage tasks, computation, and data transfer on multiple distributed computers simultaneously. It manages a network of systems of varying sizes spread across various locations. DCE is based on the client-server model, in which client requests can be served by any of the distributed servers.

To support the upgrade to a virtual distributed computing environment, it is important to choose not only the right operating system and scheduling algorithm but also the right concurrency mechanism for communication and synchronization among processes that run simultaneously across distributed systems.

Concurrency is a collection of techniques and mechanisms that let a program process multiple tasks at the same time, with the aim of lowering response time.

Concurrency arises in three different contexts:

- Multiprocessing: at the hardware level, involving multiple processors (multiple CPUs)

- Multitasking: the OS manages multiple tasks (via scheduling algorithms)

- Multithreading: at the application program level, involving multiple threads (for example, via the concurrency facilities of Go or Java)

The concurrency control mechanisms proposed as part of the Verizon enterprise web infrastructure upgrade to modern, distributed virtual systems are Mutual Exclusion (Mutex) and Monitors.

Benefits of Mutual Exclusion (Mutex) and Monitors Concurrency Mechanism to the Enterprise:

A mutex acquires a simple lock before entering the critical section of code and releases it after the critical section has executed. This lets multiple processes run simultaneously, providing the benefits of concurrency such as scalability and availability, while entering the critical section safely, one process at a time, only when needed.

Data integrity (i.e., no corruption of data) is of prime importance to any business. When used correctly, both mutexes and monitors prevent race conditions on the data they protect, so the data stays consistent.

Both mechanisms lead to better utilization of the physical infrastructure, because multiple processes can run simultaneously and use multiple CPUs and cores effectively.

Both mechanisms improve the overall response time of requests. They pave the way for running multiple processes simultaneously and provide the means to access shared resources without compromising data.

Multithreading coordinated by monitors provides a better user experience in terms of responsiveness. For example, suppose a user clicks a button in Verizon's web GUI and this sends a request to a backend service. In a single-threaded application, one thread does all the heavy lifting of rendering the new UI content as well as fetching the response from the backend service, and the user sees a page with a busy icon until both activities are complete. With multiple threads coordinated by a monitor, one thread renders the new page content while another fetches the response from the backend and updates the relevant part of the page. Users can interact with the web application sooner, without having to wait.

Both mutexes and monitors help ensure fairness, resulting in a better user experience. In a virtual distributed system, multiple requests are sent to each instance of the application simultaneously. In a single-threaded application, each request waits for the previous one to complete before it is processed. In a multi-threaded application, the monitor mechanism coordinates the threads that handle multiple requests simultaneously.

Both mechanisms provide improved throughput and resource utilization.

Challenges of Mutual Exclusion (Mutex) and Monitors Concurrency Mechanism to the Enterprise:

A distributed computing environment has no global clock, which makes process synchronization challenging. It requires careful programming with effective concurrency control to avoid data corruption and errors caused by transmission delays.

The concurrency control mechanism in a DCE is complex to design, maintain, and understand, which increases overall system complexity.

To achieve concurrency, programs run operations that are not atomic, so the order in which instructions from different processes interleave is not guaranteed. Another process can observe the intermediate state of an operation or interrupt it, which leads to higher wait time, higher response time, and lower throughput if concurrency is not implemented well.

The main challenge of any concurrency mechanism is the shared variable or resource. To overcome this and take full advantage of mutexes and monitors in Verizon's digital transformation journey, it is essential to decouple and rebuild monolithic stateful applications as cloud-native stateless microservices. Rebuilding many of the legacy applications to support this architecture involves additional cost and effort.

Improper or excessive use of locks can degrade the performance of critical business applications.

Livelock occurs when two or more processes keep changing state in response to each other without executing any of their core tasks, so the processes stay busy but do no useful work. This results in longer wait times and slower responses for end users of the affected business applications.

Deadlock can arise when two or more processes hang while each waits for another to finish. User requests to the business application then time out and eventually fail, directly impacting the business.
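One common way to avoid the deadlock described above is to acquire locks in a consistent global order. The sketch below is a minimal illustration, assuming a hypothetical Account class whose numeric id defines that order; two threads transfer in opposite directions and still cannot deadlock, because both always lock the lower id first.

```java
class LockOrderingSketch {
    static final class Account {
        final int id;      // hypothetical unique id, used only to order lock acquisition
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    static void transfer(Account from, Account to, long amount) {
        // Always lock the account with the smaller id first, whatever the direction.
        Account first = from.id < to.id ? from : to;
        Account second = (first == from) ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    /** Runs two threads transferring in opposite directions; returns the final balances. */
    static long[] runOpposingTransfers(int rounds) {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> { for (int i = 0; i < rounds; i++) transfer(a, b, 1); });
        Thread t2 = new Thread(() -> { for (int i = 0; i < rounds; i++) transfer(b, a, 1); });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new long[] { a.balance, b.balance };
    }

    public static void main(String[] args) {
        long[] balances = runOpposingTransfers(10_000);
        System.out.println(balances[0] + " " + balances[1]);
    }
}
```

If each thread instead locked its own `from` account first, the two threads could each hold one lock while waiting for the other, which is exactly the hang described above.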

Some Steps to Overcome Concurrency Mechanism Challenges:

As part of the digital transformation journey, and to maximize the benefits of upgrading to a virtual distributed computing system, monolithic applications need to be redesigned and decoupled into multiple cloud-native stateless microservices that use effective concurrency mechanisms to access persisted data.

When using locks, reduce the scope of the lock to just the small critical section of code instead of the whole function.

Avoid acquiring locks inside nested loops.

Implement retry logic around access to the critical section, and handle errors gracefully without causing the process to crash.

Migrate legacy applications built in older programming languages that don't support concurrency to modern languages such as Go, which offers an easy-to-use concurrency mechanism.
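The lock-related steps above (a small critical section, retry logic, and graceful failure) can be sketched with java.util.concurrent.locks.ReentrantLock. The class name, the order counter, and the retry parameters are hypothetical and chosen only for illustration.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class RetryLockSketch {
    private final ReentrantLock lock = new ReentrantLock();
    private int orders = 0;

    /**
     * Tries up to maxAttempts times to enter the critical section.
     * Returns false instead of blocking forever or crashing.
     */
    boolean recordOrder(int maxAttempts, long waitMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                if (lock.tryLock(waitMillis, TimeUnit.MILLISECONDS)) {
                    try {
                        orders++;          // the only statement that needs the lock
                        return true;
                    } finally {
                        lock.unlock();     // always release, even if the body throws
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();  // keep the interrupt status and give up
                return false;
            }
        }
        return false;                      // caller decides how to report the failure
    }

    int orders() {
        lock.lock();
        try {
            return orders;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        RetryLockSketch sketch = new RetryLockSketch();
        System.out.println(sketch.recordOrder(3, 50) + " " + sketch.orders());
    }
}
```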

Mutual Exclusion (Mutex) Concurrency Mechanism:

Mutual exclusion is a concurrency control property provided by the operating system (OS) kernel: a process cannot enter its critical section while another process is executing its critical section, i.e., only one process is allowed to execute the critical section at any given time.

Problem: Implementing mutual exclusion in a virtual distributed computing environment with no shared memory is tricky, because no system has complete information about the state of processes on the other systems.

Aim: A mutex mechanism aims for no deadlocks, no race conditions, no starvation, fairness, and fault tolerance.

Solution:

Message passing is the way to implement mutual exclusion in distributed systems. Some of the approaches are:

1. Token-based approach:

A single unique token is shared among all processes, and a process may enter its critical section only while it holds the token. Sequence numbers attached to requests are used to distinguish old requests from current ones.

2. Timestamp-based approach:

This approach assigns a unique timestamp to each request to execute a critical section. Requests are granted in timestamp order, so the timestamps resolve any conflict between concurrent critical section requests.

3. Quorum-based approach:

Maekawa's Algorithm was the first quorum-based mutual exclusion algorithm. A process requests permission not from all processes but only from a subset called a quorum. Three types of messages (REQUEST, REPLY, and RELEASE) are used by a process to request and gain access to the critical section.
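As a rough illustration of the token-based approach, the sketch below simulates the simplest variant, a token ring, in a single thread. It is an assumption-laden model rather than any specific published algorithm: message passing is replaced by advancing an index, there are no failures, and the names are hypothetical. It shows the core invariant that only the current token holder ever enters the critical section.

```java
import java.util.ArrayList;
import java.util.List;

class TokenRingSketch {
    /**
     * Simulates one token circulating among n processes arranged in a ring.
     * wantsCs[i] is true if process i is waiting to enter its critical section.
     * Returns the process ids in the order they entered the critical section.
     */
    static List<Integer> simulate(int n, boolean[] wantsCs, int hops) {
        List<Integer> entered = new ArrayList<>();
        boolean[] pending = wantsCs.clone();
        int holder = 0;                       // process 0 starts with the token
        for (int h = 0; h < hops; h++) {
            if (pending[holder]) {
                entered.add(holder);          // only the token holder may enter
                pending[holder] = false;      // it leaves before passing the token on
            }
            holder = (holder + 1) % n;        // pass the token to the next neighbor
        }
        return entered;
    }

    public static void main(String[] args) {
        boolean[] wants = { false, true, false, true };
        System.out.println(simulate(4, wants, 4));
    }
}
```

Because there is exactly one token, two processes can never be in the critical section at once; the cost is that a waiting process may have to wait for the token to travel around the ring.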

Monitors Concurrency Mechanism:

A monitor is a lightweight synchronization construct designed for synchronizing multiple threads within the same process. It is provided by the programming language or its framework rather than by the OS. It gives threads both mutual exclusion and cooperation: threads can wait for specific conditions to be met using the x.wait() and x.signal() constructs.

Monitors make concurrency easier to implement and less error-prone than semaphores. On the flip side, they place overhead on the compiler, which must generate the code that controls access to the critical section in a multi-threaded program. C#, Java, and Ada are some of the languages that provide monitor-style constructs; Go offers comparable building blocks (Mutex and Cond) in its sync package.

Monitor Concurrency in Java:

Let us look at how the monitor concurrency mechanism is implemented in Java. In Java, synchronization is built around an internal entity known as the intrinsic lock or monitor lock. Every object in Java is associated with a monitor, which a thread can lock and unlock. Only one thread at a time can hold the lock that guards a critical section of code. Any other thread that attempts to acquire the lock is blocked until the holding thread releases it.
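The wait and signal cooperation described for monitors maps onto Java's wait() and notifyAll() methods, which may be called only while holding the object's monitor lock. The sketch below is a minimal bounded buffer under that model; the class name, the capacity of 2, and the demo method are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class BoundedBufferSketch {
    private final Deque<Integer> items = new ArrayDeque<>();
    private final int capacity;

    BoundedBufferSketch(int capacity) {
        this.capacity = capacity;
    }

    synchronized void put(int item) throws InterruptedException {
        while (items.size() == capacity) {
            wait();                     // releases the monitor until there is room
        }
        items.addLast(item);
        notifyAll();                    // wake threads waiting for an item
    }

    synchronized int take() throws InterruptedException {
        while (items.isEmpty()) {
            wait();                     // releases the monitor until an item arrives
        }
        int item = items.removeFirst();
        notifyAll();                    // wake threads waiting for room
        return item;
    }

    /** A producer puts 1..n while a consumer sums what it takes; returns the sum. */
    static int demo(int n) {
        BoundedBufferSketch buffer = new BoundedBufferSketch(2);
        int[] sum = new int[1];
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) buffer.put(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) sum[0] += buffer.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println(demo(100));
    }
}
```

The conditions are checked in while loops rather than if statements, so a thread re-tests its condition after every wake-up.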

How to create a concurrent application in Java:

There are two basic strategies for using Thread objects to create a concurrent application in Java.

1. Instantiate a thread each time the application needs to invoke an asynchronous task.

2. Pass the application's tasks to an executor, which abstracts thread management from the rest of the application.
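The second strategy can be sketched with the ExecutorService API from java.util.concurrent. The pool size of 4 and the sum-of-squares task are arbitrary choices for illustration, and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ExecutorSketch {
    /** Computes 1^2 + 2^2 + ... + n^2, one small task per term. */
    static int sumOfSquares(int n) {
        ExecutorService pool = Executors.newFixedThreadPool(4); // the pool owns the threads
        try {
            List<Future<Integer>> results = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int x = i;
                results.add(pool.submit(() -> x * x));  // submitted as a Callable<Integer>
            }
            int total = 0;
            for (Future<Integer> result : results) {
                total += result.get();                   // blocks until that task is done
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();                             // let the worker threads exit
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(10));
    }
}
```

The application code never creates or joins a Thread directly; it only submits tasks and collects results.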

How to define and start a Thread in Java:

There are two ways to do this:

Provide a Runnable object. The Runnable interface defines a single method, run, meant to contain the code executed in the thread. The Runnable object is passed to the Thread constructor, as in the SampleRunnable example:

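    public class SampleRunnable implements Runnable {

        // The code in run() executes on the new thread.
        @Override
        public void run() {
            System.out.println("Sample Hello World from a thread!!");
        }

        public static void main(String[] args) {
            // Hand the Runnable to a Thread and start it.
            (new Thread(new SampleRunnable())).start();
        }
    }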

Subclass Thread. The Thread class itself implements Runnable. An application can subclass Thread, providing its own implementation of run, as in the SampleThread example:

    public class SampleThread extends Thread {

        // Overrides Thread.run(); executes on the new thread.
        @Override
        public void run() {
            System.out.println("Sample Hello World from a thread!!");
        }

        public static void main(String[] args) {
            (new SampleThread()).start();
        }
    }

Synchronization in Java:

When multiple threads access shared resources at the same time, two kinds of errors can occur: thread interference and memory consistency errors. The tool that prevents these errors is synchronization.

The Java language provides two basic synchronization options: synchronized methods and synchronized statements.

To make a method synchronized, add the synchronized keyword to its declaration:

    public class SampleSynchronizedCounter {

        private int x = 0;

        // Each method acquires this object's monitor lock for its whole body.
        public synchronized void incrementCounter() {
            x++;
        }

        public synchronized void decrementCounter() {
            x--;
        }

        public synchronized int value() {
            return x;
        }
    }

To use a synchronized statement, apply the synchronized keyword to a block of statements instead of the whole method; the block specifies the object whose monitor lock it acquires.

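    // lastName, nameCount, and nameList are fields of the enclosing class.
    public void sampleAddName(String name) {
        // Only the updates to the shared fields hold the monitor lock.
        synchronized (this) {
            lastName = name;
            nameCount++;
        }
        nameList.add(name);
    }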

Synchronized statements can improve concurrency because they limit the critical section to only a few statements, as opposed to synchronized methods, which hold the lock for the whole method.

Recent Java versions also support concurrency through the APIs in the java.util.concurrent package, which offers more advanced concurrency mechanisms.
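As one small example from that package family, java.util.concurrent.atomic.AtomicInteger provides a thread-safe counter without an explicit synchronized block. The sketch below is illustrative; the class name and the thread and increment counts are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterSketch {
    /** Starts `threads` workers that each increment a shared counter; returns the final count. */
    static int countWithThreads(int threads, int incrementsPerThread) {
        AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.incrementAndGet();   // atomic read-modify-write
                }
            });
            workers[t].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();                   // wait for every worker to finish
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithThreads(4, 1000));
    }
}
```

A plain int field incremented with x++ from several threads could lose updates; the atomic class avoids that without blocking other threads on a lock.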

References:

Concurrency - Synchronization. (2020, April 8). Datacadamia - Data and Co. https://datacadamia.com/data/concurrency/synchronization

Concepts: Concurrency. (2001). Rational Software Corporation. https://sceweb.uhcl.edu/helm/RationalUnifiedProcess/process/workflow/ana_desi/co_cncry.htm

Stallings, W. (2017). Operating Systems (9th Edition). Pearson Education (US). https://coloradotech.vitalsource.com/books/9780134700113

What Are Distributed Systems? An Introduction. (2021). Splunk. https://www.splunk.com/en_us/data-insider/what-are-distributed-systems.html

OS Security Risks and Mitigation Strategy

TBD

Future Considerations Using Emerging Technology

TBD