Sampling and data collection in research paper

Sampling and Data Collection in Research Paper BSHS/435 Version 1

CHAPTER 5

A crisis counselor working with a mental health agency receives a call from the county jail. The deputy there is concerned about an inmate he describes as severely depressed. The counselor responds by asking a number of questions, attempting to make an initial assessment of the severity of the inmate’s depression. Has the inmate been eating his meals? Is he sleeping too much or too little? Is his affect flat when he responds to questions? Has he made any remarks about committing suicide? Later, the counselor may interview the inmate directly, request psychological testing, or refer him to a psychiatrist for further evaluation. Such assessments are analogous to a process in research called measurement. Just as the counselor used a variety of observations by the deputy as indicators of the inmate’s condition, researchers use various observations as indicators of the concepts of interest in a research project. Measurement refers to the process of describing abstract concepts in terms of specific indicators by assigning numbers or other symbols to these indicators in accordance with rules. At the very minimum, one must have some means of determining whether a variable is present or absent, just as the counselor needs to know whether the inmate is eating or not. In many cases, however, measurement is more complex and involves assessing how much, or to what degree, a variable is present. An example is the counselor’s question about how much the inmate is sleeping; “amount of sleep” is a variable that can take on many values.

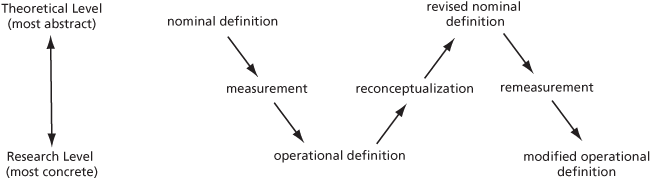

Measurement is a part of the process of moving from the abstract or theoretical level to the concrete. Recall from Chapter 2 that scientific concepts have two types of definitions: nominal and operational. Before research can proceed, researchers must translate nominal definitions into operational ones. The operational definitions indicate the exact procedures, or operations, that the researchers will use to measure the concepts. Measurement is essentially the process of operationalizing concepts. Figure 5.1 illustrates the place of measurement in the research process.

Figure 5.1 The Measurement Process

In this chapter, we discuss the general issues that relate to all measurement, beginning with some of the different ways in which we can make measurements. We then analyze how measurements that are made at different levels affect the mathematical operations that can be performed on them. Finally, we present ways of evaluating measures and determining the errors that can occur in the measurement process.

Ways of Measuring

From Concepts to Indicators

Normally, we cannot directly observe the concepts and variables that are the focus of both research and practice. We cannot see such things as poverty, social class, mental retardation, and the like; we can only infer them from something else. Take something as seemingly obvious as child abuse. Can you directly observe child abuse? Not really. What you directly observe is a bruise on a child’s back, an infant’s broken leg, or a father slapping his daughter. And even the slap may not indicate child abuse, because parents sometimes slap their children without its being a case of child abuse. However, all these things (the bruise, the broken leg, the slap) may be used as indicators of child abuse. In research and in practice, an indicator is an observation that we assume is evidence of the attributes or properties of some phenomenon. What we observe are the indicators of a variable, not the actual properties of the variable itself. Emergency room personnel may assume that a child’s broken leg is an indicator of child abuse even though they have not observed the actual abuse.

Child abuse represents a good illustration of the difficulties of moving from nominal to operational definitions with variables involving social and psychological events. At the nominal level, we might define child abuse as an occurrence in which a parent or caretaker injures a child not by accident but in anger or with deliberate intent (Gelles 1987; Korbin 1987). What indicators, however, would we use to operationalize this definition? Some things would obviously seem to indicate child abuse, such as a cigarette burn on a child’s buttock, but what about a bruise on the arm? Some subcultures in our own society view hitting children, even to the point of bruising, as an appropriate way to train or discipline them. Furthermore, some people would argue that a serious psychological disorder a child suffers is an indicator of child abuse, because it shows the parents did not provide the proper love and affection for stable development. In short, one of the problems in operationalizing child abuse, as with many other variables in human service research, is that its definition is culture-bound and involves subjective judgments. This illustrates the importance of good conceptual development and precise nominal definitions for research. It also shows how the theoretical and research levels can mutually influence one another: As we shape nominal definitions into operational ones, the difficulties that arise often lead to a reconceptualization, or a change, in the nominal definition at the theoretical level (see Figure 5.1).

The example of child abuse also illustrates another point about measurement—namely, that more than one indicator of a variable may exist. The term item is used to refer to a single indicator of a variable. Items can take numerous forms, such as an answer to a question or an observation of a behavior or characteristic. Asking a person her age or noting her sex, for example, would both produce items of measurement. In many cases, however, the process of operationalizing variables involves combining a number of items into a composite score called an index or a scale. (Although scales involve more rigor in their construction than indexes do, we can use the terms interchangeably at this point; Chapter 13 will present some distinctions between them.) Attitude scales, for example, commonly involve asking people a series of questions, or items, and then summarizing their responses into a single score that represents their attitude on an issue. A major reason for using scales or indexes rather than single items is that scales enable us to measure variables in a more precise and, usually, more accurate fashion. To illustrate the value of scales over items, consider your grade in this course. In all likelihood, your final grade will be an index, or a composite score, of your answers to many questions on many tests throughout the semester. Would you prefer that your final grade be determined by a one-item measure, such as a single multiple-choice or essay question? Probably not, because that item would not measure the full range of what you learned. Furthermore, an error on that item would indicate that you had not learned much in the course, even if the error were the result of ill health or personal problems on the day of the exam. For these reasons, then, researchers usually prefer multiple-item measures to single-item indicators.
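To make the grade example concrete, the sketch below scores a hypothetical 40-item exam (1 = correct, 0 = incorrect) as a percentage. It assumes a student who actually knows all the material and misses one item only because of a bad day: on the 40-item index that slip costs 2.5 points, while on a one-item measure it drops the grade from 100 to 0. The function name `index_score` and the item count are illustrative assumptions, not part of the text.

```python
def index_score(items: list[int]) -> float:
    """Combine item scores (1 = correct, 0 = incorrect) into a percentage."""
    if not items:
        raise ValueError("an index needs at least one item")
    return 100 * sum(items) / len(items)


# A student who knows the material answers all 40 items correctly...
many_items = [1] * 40
# ...but on a bad day misses one item because of illness.
many_items_bad_day = [0] + [1] * 39

# The same student measured with a single item.
one_item = [1]
one_item_bad_day = [0]

print(index_score(many_items), index_score(many_items_bad_day))  # 100.0 97.5
print(index_score(one_item), index_score(one_item_bad_day))      # 100.0 0.0
```

The multiple-item index absorbs one unlucky error; the single item cannot.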

We began this discussion by noting that because variables are abstract, we normally cannot observe them directly. Variables differ in their degree of abstraction, however, and this affects the ease with which we can accomplish measurement. In general, the more abstract the variable, the more difficult it is to measure. For example, a study of child abuse might include the variable “number of children in family,” on the theoretical presumption that large families create more stress for parents and, therefore, are more likely to precipitate abusive attacks on children. This is a rather easy variable to measure, because the concepts of “children” and “family” have readily identifiable, empirical referents and are relatively easy and unambiguous to observe and count. Suppose, however, that the child abuse study also included as a dependent variable “positiveness of child’s self-concept.” Because it can take many different forms, “self-concept” is a difficult notion to measure. Although we have narrowed it to the “positive—negative” dimension, it is still more difficult to measure than “number of children in family,” because we could ask a whole variety of questions to explore how positively people feel about themselves. We also can measure self-concept by behaviors, on the theoretical presumption that people who feel positively about themselves behave differently from those who do not. The point is that highly abstract concepts usually have no single empirical indicator that is clearly and obviously preferable to others as a measure of the concept.

We have emphasized the point that measurement involves transition from the abstract and conceptual level to the concrete and observable level, and this is what most typically occurs in research. Exploratory studies, however, can involve measurement in the opposite direction: First, we observe empirical indicators and then formulate theoretical concepts that those indicators presumably represent. In Chapter 2, we called this inductive reasoning. In a sense, you might think of Sigmund Freud or Jean Piaget as having done this when they developed their theories of personality and cognitive development, respectively. Piaget, for example, observed the behavior of children for many years as he gradually developed his theory about the stages of cognitive development, including concepts like egocentrism, object permanence, and reversibility (Ginsburg and Opper 1988). Piaget recognized that what he observed could be understood only if placed in a more abstract, theoretical context. In a sense, he measured something before he knew what it was he had measured. Once his theories began to develop, he then developed new concepts and hypotheses, and he formulated different measuring devices to test them deductively. The point is that whether one shifts from the abstract to the concrete, or vice versa, the logic is the same, involving the relationship between theoretical concepts and empirical indicators.

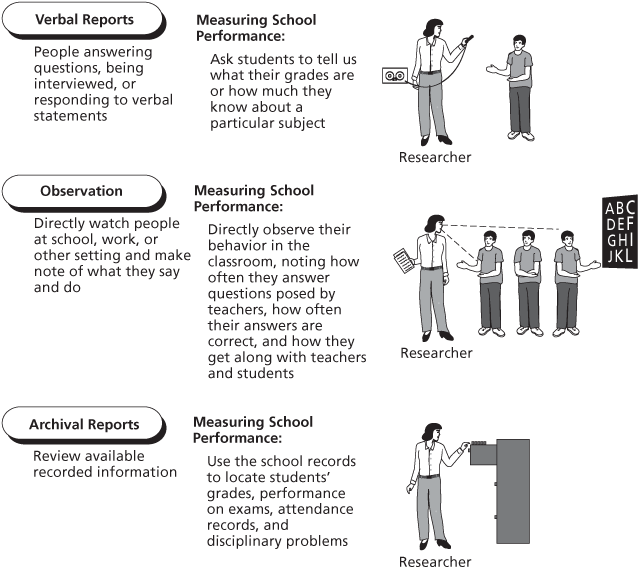

Techniques of Measuring

We will discuss specific techniques for measuring variables in other chapters of this book, but discussing them briefly at this point helps clarify the issues surrounding measurement. Measurement techniques in the social sciences and human services vary widely, because the concepts we measure are so diverse. Most of these techniques, however, fall into one of three categories (see Figure 5.2).

Figure 5.2 The Major Strategies Used by Social Scientists to Measure Variables

Verbal reports. This is undoubtedly the most common measurement technique in social research. It involves people answering questions, being interviewed, or responding to verbal statements (see Chapters 7 and 9). For example, research on people’s attitudes typically uses this technique by asking people how they feel about commercial products, political candidates, or social policies. In a study of school performance, to mention another example, we could measure how well students do in school by asking them what their grades are or how much they know about a particular subject.

Observation. Social researchers also measure concepts by making direct observations of some phenomena (see Chapter 9). We watch people at school or at work and make notes of what they say and do. We may even make an audio or video recording as a way of preserving the observations. In a study of school performance, we could measure how well students do in school by directly observing their behavior in the classroom and noting how often they answer questions posed by teachers, how often their answers are correct, and how they get along with teachers and students.

Archival records. Researchers also use a variety of available recorded information to measure variables (see Chapter 8). These records might take the form of statistical records, governmental or organizational documents, personal letters and diaries, newspapers and magazines, or movies and musical lyrics. All these archival records are the products of human social behavior and can serve as indicators of concepts in the social sciences. In the study of school performance, for example, a researcher could use school records to locate students’ grades, performance on exams, attendance records, and disciplinary problems as measures of how well they are doing in school.

These are the major ways that social scientists measure concepts. Researchers must specify exactly what aspects of verbal reports, observations, or available data will serve as indicators of the concepts they want to measure. In addition, researchers use some key criteria to help them decide whether a particular indicator is a good measure of some concept. These criteria will be discussed later in this chapter.

Positivist and Nonpositivist Views of Measurement

Much of the foundation for measurement and operationalization in the social sciences derives from the work of statisticians, mathematicians, philosophers, and scientists in a field called classical test theory or measurement theory (Bohrnstedt 1983; Stevens 1951). This field, which derives largely from the positivist view of science discussed in Chapter 2, provides the logical foundation for the issues discussed in this chapter. The logic of measurement can be described by the following formula:

X = T + E

In this formula, X represents our observation or measurement of some phenomenon; it is our indicator. It might be the grade on an exam in a social research class, for example, or a response to a self-esteem scale (see Table 5.1). Also in this formula, T represents the true, actual phenomenon that we are attempting to measure with X; it would be what a student actually learned in a social research class or what his or her true self-esteem is. The third symbol in the formula, E, represents any measurement error that occurs, or anything that influences X other than T. It might be the heat and humidity in the classroom on the day of the social research exam, which made it difficult to concentrate, or it could reflect the fact that a subject incorrectly marked a choice on the self-esteem scale, inadvertently circling a response that indicated higher or lower self-esteem than he or she actually possessed.

Table 5.1 Elements in the Process of Measurement

| X: Observation | T: True Phenomenon | E: Error |
|---|---|---|
| Reading on a weight scale | Your actual weight | Clothing you are wearing; heavy object in your pocket |
| Grade on an examination in social research class | Actual knowledge you acquired in social research class | Heat and humidity in test room; distraction due to fight with partner |
| Score on a scale measuring self-esteem | Your actual level of self-esteem | Incorrectly marking a self-esteem scale; questions on self-esteem scale that are difficult to understand |

The formula is very simple—but also very profound and important: Our measurement of any phenomenon is a product of the characteristics or qualities of the phenomenon itself and any errors that occur in the measurement process. What we strive for is measurement with no error:

E = 0

and, therefore,

X = T

The ideal to strive for is a measurement of the phenomenon that is determined only by the true state of the phenomenon itself. Scientists recognize, however, that they normally cannot achieve this ideal state in its entirety. In reality, we attempt to reduce E as much as possible. Later in this chapter, we will complicate this measurement formula a bit, but for now, it can stand as a shorthand way of understanding the process of measurement.
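A small simulation can illustrate what reducing E means in practice. The sketch below assumes, purely for illustration, that error is random, centered on zero, and normally distributed with a standard deviation of 5 points; under that assumption, averaging many independent measurements pulls the observed value X toward the true value T. Systematic errors, such as the heavy object in your pocket in Table 5.1, would not average out this way. The names `measure`, `TRUE_SCORE`, and `ERROR_SD` and their values are hypothetical.

```python
import random
import statistics

TRUE_SCORE = 70.0  # T: known here only because we are simulating it
ERROR_SD = 5.0     # assumed spread of purely random measurement error


def measure(rng: random.Random) -> float:
    """Return one observation X = T + E, where E is random error."""
    error = rng.gauss(0.0, ERROR_SD)  # E: drawn around zero
    return TRUE_SCORE + error         # X = T + E


rng = random.Random(42)  # fixed seed so the run is repeatable
single = measure(rng)
average = statistics.mean(measure(rng) for _ in range(1000))

print(f"one measurement:       E = {single - TRUE_SCORE:+.2f}")
print(f"mean of 1000 measures: E = {average - TRUE_SCORE:+.2f}")
```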

Before going deeper into the process of measurement, it is important to consider the nonpositivists’ critique of classical measurement theory. Many nonpositivists argue that this model rests on a large assumption we have not yet examined, one that may render the entire topic somewhat problematic. The assumption is that the phenomenon being measured (T) exists objectively in the world and that our measurement device merely discovers it and its properties. Some things do exist in the world independently of our perceptions and our judgments about them. The computer monitor on which these words are being written, for example, has a screen that is nine inches tall; we just measured it with a ruler. Our measurement was a discovery of the screen’s properties, and the measurement process did not create or change those properties. Now, however, think about a social science concept, such as self-esteem. We measure it by asking subjects to agree or disagree with a series of statements. We score a “strongly agree” response as “4” and a “strongly disagree” response as “1”; then we sum the responses across all ten items in the scale to give a self-esteem score that ranges from 10 to 40. What, however, is the objective reality behind this measurement? If a subject receives a score of 32 on our measurement device, what does that 32 correspond to in his or her subjective world, or mind, or consciousness? The 32 is the X in our measurement formula, but what is the T that it corresponds to? Is the link between a score of 32 on the self-esteem measure and the actual subjective experience of self as direct as the link between the measurement of a computer screen and its actual height?
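The scoring rule just described can be written out directly. This minimal sketch assumes ten items with four response options; the text fixes only the endpoints (4 and 1), so coding “agree” as 3 and “disagree” as 2 is our assumption, as is the name `self_esteem_score`. The code produces a 32 mechanically, but nothing in it says which T that 32 corresponds to, which is precisely the nonpositivists’ question.

```python
# Assumed 4-point coding; only the endpoints (4 and 1) come from the text.
RESPONSE_CODES = {
    "strongly agree": 4,
    "agree": 3,
    "disagree": 2,
    "strongly disagree": 1,
}
N_ITEMS = 10  # ten items at 1-4 points each gives the 10-40 range


def self_esteem_score(responses: list[str]) -> int:
    """Sum the coded responses to all items into one scale score."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    return sum(RESPONSE_CODES[r] for r in responses)


answers = ["strongly agree"] * 4 + ["agree"] * 4 + ["disagree"] * 2
print(self_esteem_score(answers))  # 32
```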

The nonpositivists argue that many social science concepts do not have such clear and objective referents in the world. Our concepts are based on an intuitive and theoretical understanding of what parts of the world are like. In other words, we are constructing the world, not just discovering it. We believe that something like self-esteem exists, but it is our construction of it that we measure with the self-esteem scale, not the thing itself (if the thing itself even exists). This does not make measurement theory useless, but it does suggest that the whole process is more complicated than the positivists suggest, and not nearly as objective. Nonetheless, many nonpositivists agree that some social science measurement can follow the model of measurement theory. Some social phenomena, such as age and sex, do have some objective existence in the world. A person’s age has something to do with how many times the earth has circled the sun since his or her birth, and sex has something to do with a person’s physical genitalia. The social significance of these characteristics is another matter, of course, but in many cases, the measurements of age and sex can follow classical measurement theory. Research in Practice 5.1 addresses some of these measurement issues in regard to the significant social issue of domestic violence.

A major problem in most measurement has to do with which indicators to use in a particular research project. This depends in part, of course, on theoretical concerns, but there are other matters to consider as well. One such matter has to do with whether a particular measure permits one to perform mathematical operations on it; we turn to this issue next.

Levels of Measurement

We have seen just a few of the many ways of measuring phenomena, such as asking questions or noting observations. Measures differ from one another in terms of what is called their level of measurement, or the rules that define the permissible mathematical operations that can be performed on the set of numbers produced by a measure. There are four levels of measurement: nominal, ordinal, interval, and ratio. If we keep in mind that variables can take on different values, then measurement basically involves assessing the value or category into which a particular entity falls. Measuring age, for example, is the process of placing each person into a particular age category.

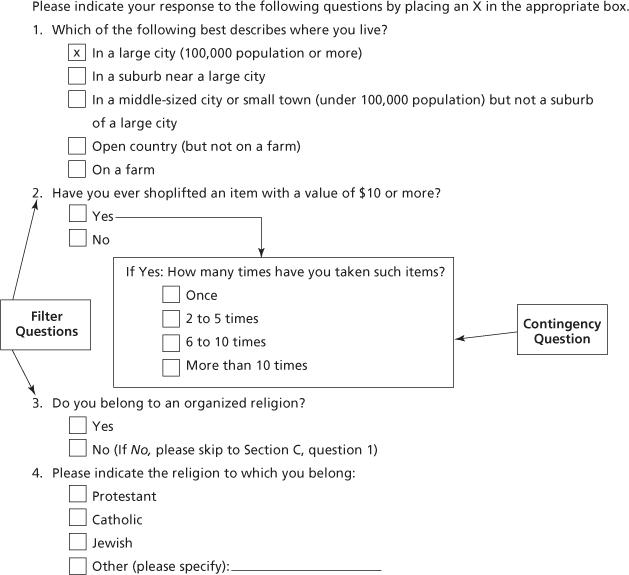

Nominal Measures

Nominal measures classify observations into mutually exclusive and exhaustive categories. They represent nominal variables at the theoretical level. Variables such as sex, ethnicity, religion, or political party preference are examples. Thus, we might classify people according to their religious affiliation by placing them into one of five categories: Protestant, Catholic, Jewish, other, or no religious affiliation. These are mutually exclusive categories, because membership in one precludes membership in another. They are exhaustive categories because there is a category for every possible case (for this measure of religious affiliation, the “other religion” and “no religion” categories ensure this). For purposes of data analysis, we might assign numbers to represent each of the categories. We could label Protestant as 1, Catholic as 2, Jewish as 3, other as 4, and no religious affiliation as 5. It is important to recognize, however, that the assignment of numbers is purely arbitrary; the numbers making up a nominal measure have none of the properties, such as ranking, ordering, and magnitude, that we usually associate with numbers. None of the usual arithmetic operations, such as adding, subtracting, multiplying, or dividing, can legitimately be performed on numbers in a nominal scale, because those numbers are merely symbols or labels used to identify the categories of the nominal variable. We could just as easily have labeled Protestant as 2 and Catholic as 1.
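Because nominal codes are only labels, a legitimate analysis should give the same answer however the numbers are assigned. The sketch below, using made-up responses from eight hypothetical people, codes the five religious-affiliation categories two ways: the text’s 1 through 5, and a variant that swaps Protestant and Catholic. The most frequent category (the mode) is unchanged, while the “average code” shifts with the arbitrary labeling, which is why it means nothing.

```python
from collections import Counter
from statistics import mean

# Hypothetical responses; "None" stands for "no religious affiliation".
responses = ["Protestant", "Catholic", "Catholic", "None", "Jewish",
             "Catholic", "Other", "Protestant"]

# The text's coding, and an equally valid one with Protestant and Catholic swapped.
coding_a = {"Protestant": 1, "Catholic": 2, "Jewish": 3, "Other": 4, "None": 5}
coding_b = {"Protestant": 2, "Catholic": 1, "Jewish": 3, "Other": 4, "None": 5}

# The most frequent category (the mode) does not depend on the codes at all.
modal_category = Counter(responses).most_common(1)[0][0]

# An "average code" changes whenever the arbitrary labels change.
mean_a = mean(coding_a[r] for r in responses)
mean_b = mean(coding_b[r] for r in responses)

print(modal_category)  # Catholic
print(mean_a, mean_b)  # 2.5 2.375
```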

Research in Practice 5.1: Behavior and Social Environment: Controversies in Measuring Violence against Women

An extensive body of literature has accumulated regarding the topic of violence against women. In the process of building this knowledge base, considerable disagreement has arisen about which harmful behaviors to include in a definition of nonlethal violence and how best to go about measuring this violence. Consider the two following excerpts, the first from a qualitative study and the second from a summary of a national, randomized survey:

I was raped by my uncle when I was 12 and my husband has beat me for years. For my whole life, when I have gone to a doctor, to my priest, or to a friend to have my wounds patched up, or for a shoulder to cry on, they dwell on my bruises, my cuts, my broken bones. The abuse in my life has taken away my trust in people and in life. It’s taken away the laughter in my life. I don’t trust myself to be able to take care of my kids, to take care of myself, to do anything to make a difference in my own life or anyone else’s. That’s the hurt I would like to fix. I can live with the physical scars. It’s these emotional scars that drive me near suicide sometimes.

A respondent interviewed by DeKeseredy and MacLeod (1997, p. 5)

Women experience significantly more partner violence than men do: 25 percent of surveyed women, compared with 8 percent of surveyed men, said they were raped and/or physically assaulted by a current or former spouse, cohabiting partner, or date in their lifetime; 1.5 percent of surveyed women and 0.9 percent of surveyed men said they were raped and/or physically assaulted by such a perpetrator in the previous 12 months. According to survey estimates, approximately 1.5 million women and 834,700 men are raped and/or physically assaulted by an intimate partner annually in the United States.

Tjaden and Thoennes (1998, p. 2)

The gut-wrenching words of a violence survivor or the decimal precision of an executive summary: Which approach is the better measure of domestic violence? The qualitative study vividly portrays one person’s experience, an experience with which many victims can identify. The survey lacks the rich description but appears to capture the immensity of the problem in terms of numbers of victims. The two approaches have fueled a debate over what focus to use when we attempt to measure violence against women.

Traditionally, many survey researchers have used operational definitions that include physical abuse indicators, such as beatings or kicks, or sexual assault features, such as forced penetration. For example, the Conflict Tactics Scale asks people to indicate how often a partner has “used a knife or gun on them” or “beat them up” (Straus et al. 1996). An argument in favor of such an approach is that it lends itself to readily quantifiable measures. One can count the number of times a victim was beaten, the number of visits to the emergency room, or the number of workdays lost because of injury. Standardized instruments such as the Conflict Tactics Scale permit researchers to make comparisons across studies and with different populations. So, in the case of the survey quoted above, the researchers can estimate the number of women who were raped or physically assaulted, and the results can be used in conjunction with those of other surveys to estimate the extent of the problem.

Is this really, however, what is most important? The victim who is quoted in the qualitative study makes an eloquent plea to focus on the psychological hurt that she endures forever as a consequence of living with an abusive partner, rather than on counting the number of assaults or physical injuries that happened. In an article discussing definition and measurement issues, Walter DeKeseredy (2000) points out that many North American surveys have followed a narrow definition, based in part on the argument that grouping physical assault with psychological, spiritual, and economic abuse muddies the water and makes causal determination impossible. Another argument is that to include “soft” abuse, such as verbal aggression and psychological damage, trivializes what most people agree is seriously abusive. In contrast, many researchers, especially those using qualitative methods, contend that violence against women is much more than just physical blows: that it is multidimensional, and that such actions as harming pets, threatening children, and verbal degradation are also essential elements. The qualitative data presented above can be part of a convincing argument that the psychological damage resulting from abuse is far from trivial.

In fact, when it comes to estimating the amount of violence, DeKeseredy argues that narrow definitions generate low incidence and prevalence rates and that these constitute a significant problem. He points out that policymakers react only to large numbers; thus, underestimating the amount of abuse may have important policy implications. Furthermore, narrow definitions create a ranking of abuse based on what is defined as crime rather than on women’s true feelings. Finally, narrow definitions increase the problem of underreporting, because research participants will only disclose abuse that fits the narrow definition rather than include other experiences that hurt them deeply. Although it may be problematic to include a wide array of abusive experiences, DeKeseredy points out that qualitative research, such as that quoted above, emphasizes the need to incorporate into survey research the features of violence that women find so devastating. As the debate developed, qualitative research served as the catalyst for forcing the research community to broaden its definition of abuse. Several measurement tools, created partly in response to the work of qualitative researchers, now tap nonphysical and nonsexual abuse. These include Tolman’s (1989) Psychological Maltreatment of Women Inventory and the psychologically/emotionally abusive and controlling behaviors data elements developed by the National Center for Injury Prevention and Control, Centers for Disease Control and Prevention (Saltzman et al. 1999).

This debate over how to measure domestic violence shows the benefits of using both qualitative and quantitative research approaches and of considering both positivist and nonpositivist arguments about measurement. DeKeseredy, for example, makes a case for the use of multiple measures to further enhance measurement. He argues that using open-ended, supplemental questions in addition to such quantitative measures as the Conflict Tactics Scale increases the chance that silent or forgetful participants may reveal abuse not reported in the context of the structured, closed-ended instrument. In summary, we see that careful definition of terms, inclusion of both qualitative and quantitative research, improvement of measurement instruments, and use of multiple forms of measurement all advance our understanding of the dynamics of important social issues, such as violence against women.

Ordinal Measures

When variables can be conceptualized as having an inherent order at the theoretical level, we have an ordinal variable and, when operationalized, an ordinal measure. Ordinal measures are of a higher level than nominal measures, because in addition to having mutually exclusive and exhaustive categories, the categories have a fixed order. Socioeconomic status, for example, constitutes an ordinal variable, and measures of socioeconomic status are ordinal scales. Table 5.2 illustrates how we might divide socioeconomic status into ordinal categories. With ordinal measurement, we can speak of a given category as ranking higher or lower than some other category; lower-upper class, for example, is higher than middle class but not as high as upper-upper class. It is important to recognize that ordinal measurement does not assume that the categories are equally spaced. For example, the distance between lower-upper class and upper-upper class is not necessarily the same as that between lower-middle class and middle class, even though in both cases the classes are one rank apart. This lack of equal spacing means that the numbers assigned to ordinal categories do not have the numerical properties that are necessary for arithmetic operations. As with nominal scales, we cannot add, subtract, multiply, or divide the numbers in an ordinal scale. The only characteristic ordinal scales have that nominal scales lack is the fixed order of the categories.

Table 5.2 Ordinal Ranking of Socioeconomic Status

| Category (highest to lowest) |
|---|
| Upper-upper |
| Lower-upper |
| Upper-middle |
| Middle |
| Lower-middle |
| Upper-lower |
| Lower-lower |
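Using the seven categories in Table 5.2, the sketch below contrasts what ordinal measurement does and does not support. It assumes two numeric codings, 1 through 7 and the squares 1 through 49; both preserve the order of the categories, so both are equally legitimate ordinal codes. Rank comparisons and the median category come out the same under either coding, but the gaps between categories one rank apart do not, which is why those gaps carry no meaning. The helper `median_category` and the sample of five people are hypothetical.

```python
# Table 5.2 categories, listed lowest to highest.
SES_ORDER = ["Lower-lower", "Upper-lower", "Lower-middle", "Middle",
             "Upper-middle", "Lower-upper", "Upper-upper"]

# Two equally valid, order-preserving codings (both are assumptions).
codes_a = {cat: i + 1 for i, cat in enumerate(SES_ORDER)}         # 1, 2, ..., 7
codes_b = {cat: (i + 1) ** 2 for i, cat in enumerate(SES_ORDER)}  # 1, 4, ..., 49


def median_category(sample: list[str], codes: dict[str, int]) -> str:
    """Return the (lower) median category after sorting by rank."""
    ordered = sorted(sample, key=lambda cat: codes[cat])
    return ordered[(len(ordered) - 1) // 2]


sample = ["Middle", "Lower-upper", "Upper-lower", "Middle", "Upper-upper"]

# Rank comparisons and the median agree under both codings...
for codes in (codes_a, codes_b):
    print(codes["Lower-upper"] > codes["Middle"], median_category(sample, codes))

# ...but the "distances" between categories one rank apart do not.
for codes in (codes_a, codes_b):
    top_gap = codes["Upper-upper"] - codes["Lower-upper"]
    middle_gap = codes["Middle"] - codes["Lower-middle"]
    print(top_gap, middle_gap)  # 1 1, then 13 7
```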

Interval Measures

The next-highest level of measurement is interval. Interval measures share the characteristics of ordinal scales (mutually exclusive and exhaustive categories and an inherent order), but they also have equal spacing between the categories. Equal spacing comes about because some specific unit of measurement, such as a degree on a temperature scale, is part of the measure. Each of these units has the same value, which results in the equal spacing. We have an interval scale if the difference between scores of, say, 30 and 40 is the same as the difference between scores of, say, 70 and 80. A 10-point difference is a 10-point difference regardless of where on the scale it occurs.

The common temperature scales, Fahrenheit and Celsius, are true interval scales. Both scales use degrees as their units of measurement and have the equal spacing that is characteristic of interval scales. A difference of 10 degrees is always the same, no matter where it occurs on the scale. These temperature scales illustrate another characteristic of true interval scales: The point on the scale labeled zero is arbitrarily selected. Neither 0°C nor 0°F is absolute zero, the complete absence of heat. Because the zero point is arbitrary in true interval scales, we cannot make statements concerning ratios; that is, we cannot say that a given score is twice or three times as high as some other score. For example, a temperature of 80°F is not twice as hot as a temperature of 40°F. Apart from such ratio statements, however, interval scales support the common arithmetic operations: If we have achieved interval-level measurement, we can legitimately add and subtract scores, compare differences, and compute averages.
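The temperature example can be checked numerically. The sketch below converts the text’s 80°F and 40°F to Celsius with the standard formula C = (F − 32) × 5/9; the ratio between the two readings is 2 in Fahrenheit but about 6 in Celsius, so neither ratio says anything about “twice as hot.” Ratios of differences, by contrast, are the same on both scales. The third reading of 60°F and the function name `f_to_c` are added for illustration.

```python
def f_to_c(fahrenheit: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


hot_f, mild_f, cool_f = 80.0, 40.0, 60.0
hot_c, mild_c, cool_c = f_to_c(hot_f), f_to_c(mild_f), f_to_c(cool_f)

# Ratios of scores depend on where zero happens to sit, so they disagree.
ratio_f = hot_f / mild_f
ratio_c = hot_c / mild_c

# Ratios of differences survive the change of scale, so they agree.
diff_ratio_f = (hot_f - mild_f) / (cool_f - mild_f)
diff_ratio_c = (hot_c - mild_c) / (cool_c - mild_c)

print(round(ratio_f, 2), round(ratio_c, 2))            # 2.0 6.0
print(round(diff_ratio_f, 2), round(diff_ratio_c, 2))  # 2.0 2.0
```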

Considerable controversy exists over which measures used in behavioral science research are true interval measures; only a few measures are clearly of interval level. For example, one that is relevant to the human services is intelligence as measured by IQ tests. The IQ tests have specific units of measurement—points on the IQ scale—and each point on the scale is mutually exclusive. Furthermore, the distance between IQs of 80 and 90 is equivalent to the distance between IQs of 110 and 120. An IQ scale has no absolute zero point, however, so we cannot say that a person with an IQ of 150 is twice as intelligent as a person with an IQ of 75. As with temperature scales, the IQ scale is, in part, an arbitrary construction that allows us to make some comparisons but not others.

Beyond a few measures such as intelligence, however, the debate begins. Some researchers argue, for example, that we can treat attitude scales as interval scales (Kenny 1986). The questions that make up attitude scales commonly involve choosing one of five responses: strongly agree, agree, uncertain, disagree, or strongly disagree. The argument is that people see the distance between “strongly agree” and “agree” as roughly equivalent to the distance between “disagree” and “strongly disagree.” This perceived equidistance, some argue, makes it possible to treat these scales as interval measures. Other researchers argue that there is no logical or empirical reason to assume that such perceived equidistance exists and, therefore, that we should always consider attitude scales as ordinal rather than interval measures.

We do not presume to settle this debate here. Rather, we raise the issue because level of measurement influences which statistical procedures to use at the data analysis stage of research. (This matter is discussed in Chapters 14 and 15.) The results of research in which the researcher used an inappropriate statistical procedure for a given level of measurement should be viewed with caution.
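To see why this choice matters at the analysis stage, consider a single five-point attitude item. The Python sketch below uses invented responses, and the 1-to-5 coding is our own assumption. The median uses only the order of the categories, so it is defensible for ordinal data; the mean assumes the categories are equally spaced. Recoding the top category with a wider gap, which keeps the order intact, leaves the median unchanged but moves the mean.

```python
import statistics

# Hypothetical responses to one attitude item (invented for illustration).
responses = ["agree", "strongly agree", "agree", "uncertain",
             "strongly disagree", "agree", "disagree", "strongly agree"]

# Assumed coding with equal spacing between adjacent categories.
equal_codes = {"strongly disagree": 1, "disagree": 2, "uncertain": 3,
               "agree": 4, "strongly agree": 5}
# Alternative coding: same order, but a wider gap at the top.
unequal_codes = {**equal_codes, "strongly agree": 6}


def summarize(codes: dict[str, int]) -> tuple[float, float]:
    """Return (median, mean) of the coded responses."""
    scores = [codes[r] for r in responses]
    # The median relies only on rank order (an ordinal assumption);
    # the mean relies on the distances between codes (an interval assumption).
    return statistics.median(scores), statistics.mean(scores)


equal_median, equal_mean = summarize(equal_codes)
unequal_median, unequal_mean = summarize(unequal_codes)
print(equal_median, equal_mean)
print(unequal_median, unequal_mean)
```

If the equal-spacing assumption is doubtful, a result that depends on the mean is correspondingly doubtful.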

Ratio Measures

The highest level of measurement is ratio. Ratio measures have all the characteristics of interval measures, but the zero point is absolute and meaningful rather than arbitrary. As the name implies, with ratio measures, we can make statements to the effect that some score is a given ratio of another score. For example, one ratio variable that human service workers are likely to deal with is income. With income, the dollar is the unit of measurement. Also, as many are all too well aware, there is such a thing as no income at all, so the zero point is absolute. Thus, it is perfectly legitimate to make statements such as this about income: An income of $20,000 is twice as much as $10,000 but only one third as much as $60,000. (We recognize, of course, that income is a ratio measure only as an indicator for the amount of money that is available to a person; if income is used as a measure of a person’s social status, for example, then a difference between $110,000 and $120,000 does not necessarily represent a shift in status equivalent to that between $10,000 and $20,000.) Given that ratio scales have all the characteristics of interval scales, we can, of course, perform all arithmetic operations on them.
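The contrast with interval scales can be shown the same way. In the Python sketch below, which uses the three incomes from the text, converting dollars to thousands of dollars changes the unit but leaves zero where it was, so each ratio comes out the same in either unit. The `to_thousands` helper is our own, for illustration only.

```python
def to_thousands(dollars: float) -> float:
    """Change the unit from dollars to thousands of dollars; zero stays zero."""
    return dollars / 1000


low, middle, high = 10_000, 20_000, 60_000  # incomes from the text, in dollars

# Ratio statements are legitimate because $0 means no income at all.
middle_vs_low = middle / low    # 2.0: twice as much
middle_vs_high = middle / high  # one third as much

# The same ratios hold after a change of unit, because the zero point is fixed.
middle_vs_low_k = to_thousands(middle) / to_thousands(low)
middle_vs_high_k = to_thousands(middle) / to_thousands(high)

print(middle_vs_low, middle_vs_low_k)
print(round(middle_vs_high, 4), round(middle_vs_high_k, 4))
```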

We summarize the characteristics of the four levels of measurement in Table 5.3. Keep in mind that, even though researchers have no control over the nature of a variable, they do have some control over how they define a variable, at both the nominal and operational levels, and this affects the level of measurement. It sometimes is possible to change the level of measurement of a variable by redefining it at the nominal or the operational level. This is important, because researchers generally strive for the highest possible level of measurement: Higher levels of measurement generally enable us to measure variables more precisely and to use more powerful statistical procedures (see Chapters 14 and 15). It also is desirable to measure at the highest possible level because it gives the researcher the most options: The level of measurement can be reduced during the data analysis, but it cannot be increased. Thus, choosing a level of measurement that is too low introduces a permanent limitation into the data analysis.

Table 5.3 The Characteristics of the Four Levels of Measurement

| Level of Measurement | Mutually Exclusive and Exhaustive | Possesses a Fixed Order | Equal Spacing between Ranks [a] | True Zero Point [a, b] |
|---|---|---|---|---|
| Nominal | ✓ | | | |
| Ordinal | ✓ | ✓ | | |
| Interval | ✓ | ✓ | ✓ | |
| Ratio | ✓ | ✓ | ✓ | ✓ |

The last four columns are characteristics of categories. ✓ = possesses that characteristic.

[a] Permits standard mathematical operations of addition, subtraction, multiplication, and division.

[b] Permits statements about proportions and ratios.
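The point that the level of measurement can be reduced during analysis, but never increased, is easy to demonstrate. In the Python sketch below, the ages and the cutoffs in the `age_group` function are hypothetical. Collapsing ratio-level ages into ordered categories yields an ordinal variable, but several different ages land in the same category, so the original values cannot be recovered from the categories.

```python
from collections import defaultdict

ages = [19, 34, 35, 52, 70]  # hypothetical ratio-level measurements (years)


def age_group(age: int) -> str:
    """Collapse an exact age into an ordered category (hypothetical cutoffs)."""
    if age < 30:
        return "young adult"
    if age < 60:
        return "middle-aged"
    return "older adult"


# Going down a level is a simple mapping from ages to categories...
groups = [age_group(a) for a in ages]

# ...but going back up is impossible: one category stands for many ages.
ages_by_group = defaultdict(list)
for age, group in zip(ages, groups):
    ages_by_group[group].append(age)

print(groups)
print(dict(ages_by_group))
```

Once only the categories are recorded, the 18-year gap between the 34- and 52-year-olds is lost for good.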

The primary determinant of the level of measurement, however, is the nature of the variable we want to measure. The major concern is measuring a variable accurately (a topic we will discuss at length in the next section). Religious affiliation, for example, is a nominal variable, because that is the nature of the theoretical concept of “religious affiliation.” There is no way to treat religious affiliation as anything other than a merely nominal classification, but by changing the theoretical variable somewhat, we may open up higher levels of measurement. If, instead of religious affiliation, we were to measure religiosity, or the strength of religious beliefs, then we would have a variable that we could conceptualize and measure as ordinal and, perhaps, even as interval. On the basis of certain responses, we could easily rank people into ordered categories of greater or lesser religiosity. Thus, the theoretical nature of the variable plays a large part in determining the level of measurement. This illustrates, once again, the constant interplay between theoretical and research levels (see Figure 5.1). The decision regarding level of measurement at the research level might affect the conceptualization of variables at the theoretical level.

Finally, note that nominal variables are not inherently undesirable. It is wrong to assume that variables capable of measurement at higher levels are always better than nominal variables. The first consideration is to select variables on theoretical grounds, not on the basis of their possible level of measurement. Thus, if a research study really is concerned with religious affiliation and not with religiosity, then the nominal measure is the correct one to use, not a measure of religiosity (even though that measure is ordinal or, possibly, interval). At the same time, researchers do strive for more accurate and powerful measurement: Other things being equal, a researcher who has two measures available, one ordinal and one interval, generally prefers the interval measure.

Discrete versus Continuous Variables

In addition to considering the level of measurement of a variable, researchers also distinguish between variables that are discrete and those that are continuous. Discrete variables have a finite number of distinct and separate values. A perusal of a typical client fact sheet from a human service agency reveals many examples of discrete variables, such as sex, household size, number of days absent, or number of arrests. Household size is a discrete variable because households can be measured only in a discrete set of units, such as having one member, two members, and so on; no meaningful measurement values lie between these distinct and separate values. Continuous variables, at least theoretically, can take on an infinite number of values. Age is a continuous variable because we can measure age by an infinite array of values. We normally measure age in terms of years, but theoretically, we could measure it in terms of months, weeks, days, minutes, seconds, or even nanoseconds! There is no theoretical limit to how precise the measurement of age might be. For most social science purposes, the measurement of age in terms of years is quite satisfactory, but age is nonetheless a continuous variable.

Nominal variables are, by definition, discrete in that they consist of mutually exclusive or discrete categories. Ordinal variables also are discrete. The mutually exclusive categories of an ordinal variable may be ranked from low to high, but there cannot be a partial rank. For example, in a study of the military, rank might be ordered as 1 = private, 2 = corporal, and so on, but it would be nonsensical to speak of a rank of 1.3. In some cases, interval and ratio variables are discrete. For example, family size and number of arrests can take only whole-number values. (We can summarize discrete interval and ratio data by saying, for example, that the average family size is 1.8 people, but this is a summary statistic, not a measurement of a particular household.) Many variables at the interval and ratio level are continuous, at least at the theoretical level. A researcher may settle for discrete indicators either because the study does not demand greater precision or because no tools exist that can measure the continuous variable with sufficient reliability. In some cases, researchers debate whether a particular variable is discrete or continuous in nature. For example, we used social class as an illustration of an ordinal variable, suggesting that several distinct classes exist. Some argue that social class is inherently a continuous interval variable and that we treat it as ordinal only because we lack instruments that would permit researchers to measure it reliably as a true continuous, interval variable (Borgatta and Bohrnstedt 1981).
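The parenthetical point about average family size can be made concrete. In the Python sketch below, five hypothetical households each have a whole number of members, yet their mean is 1.8. The mean summarizes the group; it is not a value that any single household can take.

```python
import statistics

household_sizes = [1, 1, 2, 2, 3]  # hypothetical discrete measurements

# Every individual measurement is a whole number of people.
assert all(isinstance(size, int) for size in household_sizes)

average_size = statistics.mean(household_sizes)  # 9 people / 5 households

print(average_size)                     # 1.8
print(average_size in household_sizes)  # False: no household has 1.8 members
```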

A variable, then, is continuous or discrete by its very nature, and the researcher cannot change that. It is possible to measure a continuous variable by specifying a number of discrete categories, as we typically do with age, but this does not change the nature of the variable itself. Whether variables are discrete or continuous may influence how we use them in data analysis. Knowing the level of measurement and whether variables are discrete or continuous has implications for selecting the best procedures for analyzing the data.
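Measuring a continuous variable in discrete units can be sketched with Python's standard `datetime` module. The function `age_in_completed_years` is our own helper, and the birth dates are invented. Two people whose ages differ by 324 days are both recorded as 34, because whole years are the discrete categories chosen for measurement; elapsed time itself remains continuous.

```python
from datetime import date


def age_in_completed_years(birth: date, on: date) -> int:
    """Age as a whole number of completed years (a discrete category)."""
    years = on.year - birth.year
    # Subtract one if the birthday has not yet occurred in the year of `on`.
    if (on.month, on.day) < (birth.month, birth.day):
        years -= 1
    return years


survey_date = date(2024, 12, 31)
person_a = date(1990, 1, 10)    # hypothetical birth dates
person_b = date(1990, 11, 30)

# A finer, but still discrete, measurement: elapsed days.
days_a = (survey_date - person_a).days
days_b = (survey_date - person_b).days

print(age_in_completed_years(person_a, survey_date), days_a)
print(age_in_completed_years(person_b, survey_date), days_b)
print(days_a - days_b)  # 324
```

Choosing days instead of years makes the measurement more precise without changing the continuous nature of age.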

Evaluating Measures

We have seen that there normally are a number of indicators, sometimes a large number and at different levels of measurement, that we can use to measure a variable, but how do we choose the best of these measures for a particular study? A number of factors come into play in making this decision, including matters of feasibility (discussed in Chapter 4). Here, we want to discuss two additional and very important considerations in this regard: the validity and reliability of measures (Alwin 2007).

Validity

Validity refers to the accuracy of a measure: Does it accurately measure the variable it is intended to measure? If we were developing a measure of self-concept, a major concern would be whether our measuring device measures the concept as it is theoretically defined. There must be a fairly clear and logical relationship between the way that a variable is nominally defined and the way that it is operationalized. For example, if we propose to measure self-concept on the basis of how stylishly people dress, then we probably would have an invalid measure. Many factors influence the way that people dress at any given time. The slight possibility that one of these factors might have something to do with self-concept is not sufficient to make the suggested measure valid. The validity of measures is very difficult to demonstrate with any finality. Several approaches to the question of validity exist, however, and they can offer evidence regarding the validity of a measure.

Face validity involves assessing whether a logical relationship exists between the variable and the proposed measure. It essentially amounts to a rather commonsense comparison of what makes up the measure and the theoretical definition of the variable: Does it seem logical to use this measure to reflect that variable? We might measure child abuse in terms of the reports that physicians or emergency room personnel make concerning injuries suffered by children. This is not a perfect measure, because health personnel might be wrong. It does, however, seem logical that an injury such people report might reflect actual abuse.

No matter how carefully done, face validity clearly is subjective in nature. All we have is logic and common sense as arguments for the validity of a measure. This makes face validity the weakest demonstration of validity, and it usually should be considered as no more than a starting point. All measures must pass the test of face validity. If they do, then we should attempt one of the more stringent methods for assessing validity.

An extension of face validity is called content validity, or sampling validity, which has to do with whether a measuring device covers the full range of meanings or forms that are included in the variable being measured. In other words, a valid measuring device provides an adequate, or representative, sample of all content, or elements, or instances, of the phenomenon being measured. For example, if one were measuring general self-esteem, it would be important to recognize that self-esteem can relate to many realms of people’s lives, such as work, school, or the family. Self-esteem might get expressed or come into play in all those settings. A valid measure of self-esteem, then, would take that variability into account. If a measure of self-esteem consisted of a series of statements to which people expressed degrees of agreement, then a valid measure would include statements that relate to those many settings in which self-esteem might be expressed. If all the statements in the measuring device had to do, say, with school, then it would be a less-valid measure of general self-esteem.

Content validity is a more extensive assessment of validity than is face validity, because it involves a detailed analysis of the breadth of the measured concept and its relationship to the measuring device. Content validity involves two distinct steps: (1) determining the full range or domain of the content of a variable and (2) determining whether all those domains are represented among the items that constitute the measuring device. It is still a somewhat subjective assessment, however, in that someone has to judge what the full domain of the variable is and whether a particular aspect of a concept is adequately represented in the measuring device. There are no agreed-on criteria that determine whether a measure has content validity. Ultimately, it is a judgment, albeit a more carefully considered judgment than occurs with face validity.

One way to strengthen confidence in face or content validity is to gather the opinions of other investigators, especially those who are knowledgeable about the variables involved, regarding whether particular operational definitions are logical measures of the variables. This extension of face or content validity, which sometimes is referred to as jury opinion, is still subjective, of course. Because more people serve as a check on bias or misinterpretation, however, jury opinion is superior to individual tests of face or content validity.

Criterion validity establishes validity by showing a correlation between a measurement device and some other criterion or standard that we know or believe accurately measures the variable under consideration. Or, we might correlate the results of the measuring device with some properties or characteristics of the variable that the measuring device is intended to measure. For example, a scale intended to measure risk of suicide, if it is to be considered valid, should correlate with the occurrence of self-destructive behavior. The key to criterion validity is to find a criterion variable against which to compare the results of the measuring device.

Criterion validity moves away from the subjective assessments of face validity and provides more objective evidence of validity. One type of criterion validity is concurrent validity, which compares the instrument under evaluation to some already-existing criterion, such as the results of another measuring device. (Presumably, any other measuring devices in this assessment have already been tested for validity.) Lawrence Shulman (1978), for example, used a form of concurrent validity to test an instrument intended to measure the practice skills of human service practitioners. This instrument consisted of a questionnaire in which clients rated the skills of practitioners. Shulman reasoned that clients would view more skilled practitioners as more helpful and that those practitioners would have more satisfied clients. Thus, Shulman looked for correlations between how positively clients rated a practitioner’s skills and the perceived helpfulness of practitioners or satisfaction of clients. These correlations offered evidence for the validity of the measure of practitioners’ skills.

Numerous existing measures can help establish the concurrent validity of a newly developed measure. (Following are only some of the compilations of such measures available in the social sciences and the human services: Bloom, Fischer, and Orme 2009; Corcoran and Fischer 2000; Fredman and Sherman 1987; Magura and Moses 1986; McDowell and Newell 1996; Miller and Salkind 2002; Robinson, Shaver, and Wrightsman 1991; Schutte and Malouff 1995; Touliatos et al. 2000.) More measures can be found in research articles in professional journals. In addition, the Consulting Psychologists Press and other organizations publish catalogs of measures they make available to assess a wide array of skills, behaviors, attitudes, and other variables. (This also suggests, as pointed out in Chapter 4, that a thorough review of the literature, undertaken before going through all the work of creating a new measure, may unearth an existing measure that meets one’s needs and has already been demonstrated to have adequate validity and reliability.) Then, it is a matter of applying both measures to the same sample and comparing the results. If a substantial correlation is found between the measures, we have reason to believe that our measure has concurrent validity. As a matter of convention, a correlation of r = .50 is considered to be the minimum required for establishing concurrent validity.
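Once both measures have been applied to the same sample, the comparison comes down to a correlation. The Python sketch below computes Pearson's r from its definition and applies the r = .50 convention mentioned above. The scores are hypothetical, and `pearson_r` is our own helper rather than part of any library.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's correlation coefficient for two paired lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists with at least two scores each")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


CONCURRENT_VALIDITY_MIN_R = 0.50  # convention stated in the text

# Hypothetical scores for six people on the new and the established measure.
new_measure = [10, 12, 15, 18, 20, 25]
established_measure = [30, 33, 35, 41, 40, 50]

r = pearson_r(new_measure, established_measure)
print(f"r = {r:.2f}; meets convention: {r >= CONCURRENT_VALIDITY_MIN_R}")
```

A high r here is only as meaningful as the established measure is valid, a limitation taken up in the next paragraph.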

The inherent weakness of concurrent validity lies in the validity of the existing measure used for comparison. All we can conclude is that our measure is about as valid as the other one. If the measure that we select for comparison is not valid, then the fact that ours correlates with it hardly makes our measure valid. For this reason, researchers assessing concurrent validity should compare their measure only with measures whose validity has already been established by research.

A second form of criterion validity is predictive validity, in which an instrument predicts some future state of affairs. In this case, the criteria that are used to assess the instrument are certain future events. The Scholastic Assessment Test (SAT), for example, can be assessed for predictive validity by comparing performance on the test with how people perform in college. If people who score high on the SAT do better in college than those who score low, then the SAT is, presumably, a valid measure of scholastic ability. Some measures are created for the specific purpose of predicting a given behavior, and these measures are obvious candidates for assessment by predictive validity. For example, researchers have attempted to develop a measure that can predict which convicted criminals are likely to revert to high involvement with crime after being released from prison (Chaiken and Chaiken 1984). Information about the number and types of crimes that people commit, the age at which they commit their first crime, and involvement with hard drugs serves as the basis for these predictions. Ultimately, a measure’s ability to make accurate predictions about who actually experiences high involvement with crime after release validates that measure.

Because such validation may require numerous applications and many years, the scales can be assessed initially for validity on the basis of their ability to differentiate between high and low crime involvement among current criminals. We expect that if a measure can make this differentiation, it also can predict future involvement in crime. This variation on predictive validity is the known groups approach to validity. If we know that certain groups are likely to differ substantially on a given variable, then we can use a measure’s ability to discriminate between these groups as an indicator of validity. Suppose, for example, we were working on a measure of prejudice. We might apply the measure to a group of ministers, whom we would expect to be low in prejudice, and to a group of people affiliated with Aryan Nation, whom we would expect to be high in prejudice. If these groups differed significantly in how they responded to the measurement instrument, then we would have reason to believe that the measure is valid. If the measure failed to show a substantial difference, we would certainly have doubt about its validity.
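The known groups comparison can be sketched as a difference between two group means. The Python code below computes Welch's t statistic, one common way (though not the only one) to judge whether two groups differ, using invented prejudice scores. The helper `welch_t` is our own. Deciding whether a given t value is statistically significant requires the t distribution, which we leave to a statistics package (for example, SciPy's `scipy.stats.ttest_ind` with `equal_var=False`).

```python
import math
import statistics


def welch_t(group_a: list[float], group_b: list[float]) -> float:
    """Welch's t statistic for the difference between two group means."""
    var_a = statistics.variance(group_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(group_b)
    standard_error = math.sqrt(var_a / len(group_a) + var_b / len(group_b))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / standard_error


# Hypothetical scores on a new prejudice measure (higher = more prejudice).
expected_high = [30, 28, 32, 31, 29]  # group expected to score high
expected_low = [10, 12, 11, 9, 13]    # group expected to score low

t = welch_t(expected_high, expected_low)
print(f"t = {t:.1f}")
```

A large t in the expected direction supports validity; as the next paragraph notes, it says little about finer distinctions between less extreme groups.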

Despite the apparent potential of the known groups approach, it does have its limitations. Frequently, no groups are known to differ on the variable that we are attempting to measure. In fact, the purpose of developing a measure often is to allow the identification of groups who do differ on some variable. Thus, we cannot always use the known groups technique. When we do, we also have to consider a further limitation—namely, that it cannot tell us whether a measure can make finer distinctions between less-extreme groups than those used in the validation. Perhaps the measure of prejudice just described shows the members of Aryan Nation to be high in prejudice and the ministers to be low. With a broader sample, however, the measure may show that only the Aryan Nation members score high and that everyone else, not just ministers, scores low. Thus, the measure can distinguish between groups only in a very crude fashion.

Construct validity, the most complex of the types of validity that we discuss here, involves relating an instrument to an overall theoretical framework to determine whether the instrument is correlated with all the concepts and propositions that comprise the theory (Cronbach and Meehl 1955). In this case, instruments are assessed in terms of how they relate not to one criterion but, rather, to the numerous criteria that can be derived from some theory. For example, if we develop a new measure of socioeconomic status, we can assess construct validity by showing that the new measure relates to other variables in the ways predicted by the many hypotheses that can be derived from a theory of occupational attainment. In the theory, numerous propositions would relate occupational attainment and socioeconomic status to a variety of other concepts. If we do not find some or all of the predicted relationships, then we may question the validity of the new measuring instrument. (Of course, it may be that the theory itself is flawed; this possibility must always be considered when assessing construct validity.)

Construct validity takes some very complex forms. One is the multitrait-multimethod approach (Campbell and Fiske 1959). This is based on two ideas: First, two instruments that are valid measures of the same concept should correlate rather highly with each other even though they are different instruments. Second, two instruments, even if similar to each other, should not correlate highly if they measure different concepts. This approach to validity involves the simultaneous assessment of numerous instruments (multimethod) and numerous concepts (multitrait) through the computation of intercorrelations. Wolfe and colleagues (1987) used this technique to assess the validity of children’s self-reports about negative emotions, such as aggressiveness and depression. The point is that assessing construct validity can become highly complex, but this complexity offers greater evidence for the validity of the measures.
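A full multitrait-multimethod analysis involves many comparisons, but its core logic can be sketched in a few lines. In the Python example below, the two methods (self-report and a hypothetical report by parents) and every correlation are invented; they are not Wolfe and colleagues' data. The check asks whether correlations between different methods measuring the same trait (convergent evidence) exceed every correlation between measures of different traits (discriminant evidence). This is a simplification of Campbell and Fiske's criteria, not a complete implementation.

```python
# Hypothetical intercorrelations among four measures: 2 traits x 2 methods.
# Each key is an unordered pair of (trait, method) measures.
correlations = {
    frozenset({("aggression", "self"), ("aggression", "parent")}): 0.62,
    frozenset({("depression", "self"), ("depression", "parent")}): 0.58,
    frozenset({("aggression", "self"), ("depression", "self")}): 0.25,
    frozenset({("aggression", "parent"), ("depression", "parent")}): 0.30,
    frozenset({("aggression", "self"), ("depression", "parent")}): 0.12,
    frozenset({("aggression", "parent"), ("depression", "self")}): 0.10,
}

convergent = []    # same trait, different methods: expected to be high
discriminant = []  # different traits: expected to be lower
for pair, r in correlations.items():
    (trait_1, method_1), (trait_2, method_2) = pair
    if trait_1 == trait_2 and method_1 != method_2:
        convergent.append(r)
    elif trait_1 != trait_2:
        discriminant.append(r)

supports_validity = min(convergent) > max(discriminant)
print(convergent, discriminant, supports_validity)
```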

Eye on Ethics: The Ethics of Measuring Shameful, Harmful, or Deviant Behavior

How do you validly measure people’s willingness to engage in shameful, even harmful behavior in an experiment and remain ethical? For many, Stanley Milgram’s classic study on obedience to authority (Milgram 1974) exemplifies exposing participants to unacceptable harm in the conduct of research. Milgram set up a teaching laboratory where participants were told that the study concerned the effects of punishment on learning. Each participant was led to believe that he had been assigned the role of teacher who would administer electrical shocks to a “learner” in order to enhance learning. There was an imposing-looking shock generator machine, complete with red warning danger labels and appearing to administer electrical shocks up to 450 volts in 15-volt increments. Milgram measured how far up the scale participants would go in administering shocks as the “learner” on the other side of the wall expressed more distress with the increase in voltage, finally falling silent as if severely injured. The disturbing result was that 65 percent of participants continued administering shocks all the way to the maximum 450 volts.

Milgram’s last studies were conducted about the time that the U.S. Department of Health, Education, and Welfare was establishing its ethical guidelines and mandating Institutional Review Boards (see Chapter 3). Given the furor over perceived risk of psychological harm raised by Milgram’s work, it seemed unlikely that anyone would ever gain approval for similar studies from a modern IRB. But the question Milgram examined is still of vital interest today. The Holocaust and Abu Ghraib cause us to continue asking how people can engage in inhumane behavior.

Recently, psychologist Jerry Burger addressed these ethical concerns well enough to secure IRB approval and conduct a modern replication of Milgram’s research. Burger resolved the ethical issues by modifying the measurement of the dependent variable through what he calls the “150 Volt Solution” (Burger 2009, p. 2). Previous studies showed that about 79 percent of participants who were willing to administer shocks of 150 volts continued on through the full range to 450 volts. Burger argued that little additional data was gained by subjecting participants to demands to administer higher and higher levels of shock. He argued that the 150-volt limit was a valid measure of people’s willingness to engage in behavior that was very harmful to other people, yet ethical because it did not subject people to the great stress of thinking they were administering very large shocks. The researcher included other safeguards in order to gain IRB approval: a screening process for applicants, multiple reminders that they could withdraw their participation, immediate debriefing, and the presence of a clinical psychologist who was instructed to end the session immediately at any sign of participant distress. So what did the study show? As with Milgram, most of Burger’s participants complied with the authority figure’s instructions and delivered the maximum shock that the study permitted.

The types of validity we have discussed so far—face, content, criterion, and construct—involve a progression in which each builds on the previous one. Each type requires more information than the prior ones but provides a better assessment of validity. Unfortunately, many studies limit their assessment to content validity, with its heavy reliance on the subjective judgments of individuals or juries. Although this sometimes is necessary, measures subjected only to content validity should be used with caution. The Eye on Ethics section discusses some ethical considerations that can arise in trying to develop valid measures of some behaviors.

Reliability

In addition to validity, measures also are evaluated in terms of their reliability, which refers to a measure’s ability to yield consistent results each time it is applied. In other words, reliable measures fluctuate only because of variations in the variable being measured. An illustration of reliability can be found at any carnival, where there usually is a booth with a person guessing people’s weights within a certain range of accuracy, say, plus or minus three pounds. The customer essentially bets that the carnie’s ability to guess weights is sufficiently unreliable that his or her estimate will fall outside the prescribed range, and if so, the customer will win a prize. A weight scale, of course, is a reliable indicator of a person’s weight because it records roughly the same weight each time the same person stands on it, and the carnie provides such a scale to assess his or her guess of the customer’s weight. Despite the fact that carnies who operate such booths become quite good at guessing weights, they do occasionally guess wrong, influenced, perhaps, by aspects of the customer other than his or her actual weight, such as loose clothing that obscures a person’s physique.

In general, a valid measure is reliable. So, if we were certain of the validity of a measure, then we would not need to concern ourselves with its reliability. Evidence of validity, however, is always less than perfect, and this is why we turn to other ways of evaluating measures, including reliability. Reliability gives us additional, indirect evidence about validity: A reliable measure may be valid, whereas an unreliable measure cannot be. Fortunately, we can demonstrate reliability in a more straightforward manner than we can demonstrate validity. Many specific techniques exist for estimating the reliability of a measure, but all are based on one of two principles: stability and equivalence. Stability is the idea that a reliable measure should not change from one application to the next, assuming that the concept being measured has not changed. Equivalence is the idea that all items that make up a measuring instrument should measure the same thing and, thus, be consistent with one another. The first technique for estimating reliability, test-retest reliability, uses the stability approach; the others discussed use the equivalence principle.

Test-Retest

The first and most generally applicable assessment of reliability is called test-retest. As the name implies, this technique involves applying a measure to a sample of people and then, somewhat later, applying the same measure to the same people again. After the retest, we have two scores on the same measure for each person, as illustrated in Table 5.4. We then correlate these two sets of scores with an appropriate statistical measure of association (see Chapter 15). Because the association in test-retest reliability involves scores obtained from two identical questionnaires, we fully expect a high degree of association. As a matter of convention, a correlation coefficient of .80 or better normally is necessary for a measure to be considered reliable. In Table 5.4, the r means that the particular statistic used was Pearson’s correlation coefficient, and the value of .98 indicates that the measurement instrument is highly reliable according to the test-retest method.

Table 5.4 Hypothetical Test-Retest Data

| Subjects | Initial Test | Retest |
|---|---|---|
| 1 | 12 | 15 |
| 2 | 15 | 20 |
| 3 | 22 | 30 |
| 4 | 38 | 35 |
| 5 | 40 | 35 |
| 6 | 40 | 38 |
| 7 | 40 | 41 |
| 8 | 60 | 55 |
| 9 | 70 | 65 |
| 10 | 75 | 77 |

r = .98

Lawrence Shulman (1978), in addition to subjecting his measure of practice skills to the tests of validity mentioned earlier, also tested its reliability. He did so by sending versions of the questionnaire to a set of clients and then sending an identical questionnaire two weeks later to the same clients. This provided him with a test-retest assessment of reliability, and he obtained a correlation coefficient of .75. When a reliability coefficient is close to the conventional level, such as in this case, then the researcher must make a judgment about whether to assume that the instrument is reliable (and the low coefficient is a result of factors other than the unreliability of the instrument) or to rework the instrument to obtain higher levels of association.

In actual practice, we cannot simply use the test-retest method as suggested, because exposing people to the same measure twice creates a problem known as multiple-testing effects (Campbell and Stanley 1963). Whenever we apply a measure to a group of people a second time, they may not react to it in the same way as they did the first time. They may, for example, recall their previous answers, and that could influence their second response. People might respond as they recall doing the first time to maintain consistency, or people might purposely change responses for the sake of variety. Either case can have a confounding effect on testing reliability. If people strive for consistency, then their efforts can mask actual unreliability in the instrument. If they deliberately change responses, then a reliable measure can appear to be less reliable.

A solution to this dilemma is to divide the test group randomly into two groups: an experimental group that is tested twice and a control group that is tested only once, at the time of the retest. Table 5.5 illustrates the design for such an experiment. Ideally, the measure will yield consistent results in all three testing sessions; if it does, we have solid reason to believe the measure is reliable. Conversely, substantial differences among the sessions may indicate unreliability. If, for example, the experimental group responds consistently on both occasions but the control group differs, the measure may be unreliable, and the experimental group's consistency might be a product of multiple-testing effects. Alternatively, if the experimental group yields inconsistent results but the control group's responses resemble the experimental group's initial test, the inconsistency may also stem from multiple-testing effects, namely from the experimental group's changing its answers during the retest. Despite that inconsistency, the measure still might be reliable. Finally, the results of all three testing sessions may be inconsistent, an outcome suggesting that the measure is not reliable. If either of the two ambiguous outcomes occurs, researchers should conduct a second test-retest experiment in the hope of obtaining clearer results. If the same result recurs, the instrument should be redesigned.

Table 5.5 Design for Test-Retest

| Group | Initial Test | Retest |
|---|---|---|
| Experimental group | Yes | Yes |
| Control group | No | Yes |
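The decision logic for the design in Table 5.5 can be summarized in a few lines of code. The sketch below is a simplification under stated assumptions: it reduces each comparison to a yes/no judgment (whether the experimental group's two sessions agree, and whether the control group's single session resembles the experimental group's initial test) and leaves it to the researcher to decide what counts as agreement. The function name `interpret_test_retest` and its verdict strings are hypothetical.

```python
def interpret_test_retest(experimental_consistent: bool,
                          control_matches_initial: bool) -> str:
    """Return a verdict for the test-retest design with a control group.

    experimental_consistent: the experimental group's initial test and
        retest agree.
    control_matches_initial: the control group's scores (collected only at
        the retest) resemble the experimental group's initial test.
    """
    if experimental_consistent and control_matches_initial:
        # All three testing sessions agree.
        return "reliable"
    if experimental_consistent:
        # Experimental group consistent, control group differs: the
        # consistency may come from respondents recalling earlier answers.
        return "ambiguous: repeat the experiment"
    if control_matches_initial:
        # Experimental group changed answers on the retest, but the control
        # group matches its initial test: the measure may still be reliable.
        return "ambiguous: repeat the experiment"
    # All three testing sessions disagree.
    return "not reliable"


for exp_ok in (True, False):
    for ctrl_ok in (True, False):
        print(exp_ok, ctrl_ok, interpret_test_retest(exp_ok, ctrl_ok))
```

The two "ambiguous" branches correspond to the outcomes the text says call for a second experiment; only the first and last branches yield a firm conclusion.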

The test-retest method of assessing reliability has both advantages and disadvantages. Its major advantage is that it can be used with many measures, which is not true of alternative tests of reliability. Its disadvantage is that it is slow and cumbersome, requiring two testing sessions and, preferably, a control group. In addition, as we have seen, the outcome may be unclear, making it necessary to repeat the whole procedure. Finally, we cannot use the test-retest method on measures of variables whose values might change during the interval between tests. People's attitudes, for example, can change for reasons that have nothing to do with the testing, so a measure of attitudes might appear unreliable when it is not.

Multiple Forms

If our measuring device is a multiple-item scale, as often is the case, we can approach the question of reliability through the technique of multiple forms. When developing the scale, we create two separate but equivalent versions made up of different items, such as different questions. We then administer the two forms successively to the same people during a single testing session. As in test-retest, we correlate the results from the two forms using an appropriate statistical measure of association, with the same convention that r = .80 or better is required to establish reliability. If the correlation between the two forms is sufficiently high, we can assume that each form is reliable.

Multiple forms have the advantages of requiring only one testing session and no control group. These may be significant advantages if multiple testing sessions or a control group would be impractical. In addition, we need not worry about changes in a variable over time, because both forms are administered at the same time.

The multiple-forms technique relies on the two forms appearing to respondents as a single long measure, so that they do not realize they are essentially taking the same test twice. This need to disguise the repetition points to one disadvantage of multiple forms: to maintain the equivalence of the forms, the items in the two forms probably will be quite similar, so similar that people may realize they are answering essentially the same items twice. If this occurs, it raises the specter of multiple-testing effects and casts doubt on the accuracy of the reliability test. Another disadvantage of multiple forms is the difficulty of developing two measures with different items that really are equivalent. If the two forms yield inconsistent results, the cause may be differences between the forms rather than the unreliability of either one. In a sense, it is questionable whether multiple forms test reliability at all, rather than merely our ability to create equivalent versions of the same measure.

Internal Consistency Approaches

Internal consistency approaches estimate reliability from a single scale administered once to one group of people. In the split-half approach, for example, the test group responds to the complete measuring instrument. We then randomly divide the items of the instrument into two halves and treat each half as though it were a separate scale, computing each respondent's score on each half. We correlate the two sets of half-scores using an appropriate measure of association; once again, a coefficient of r = .80 or better is needed to demonstrate reliability. In his study of practice skills mentioned earlier, Shulman (1978) used a split-half reliability test on his instrument in addition to the test-retest method. He divided each respondent's answers to his questions about practitioners' skills into two roughly equivalent sets, correlated the two sets of answers, and, after applying a correction discussed shortly, obtained a correlation of .79. This is an improvement over the .75 he found with the test-retest method, and it comes very close to the conventional level of .80.
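A split-half correlation can be sketched in Python as follows. The example assumes responses are stored as one row per respondent and one column per item, that all items measure the same variable, and that they are scored in the same direction; the function names and the six-item data set are hypothetical. Six items are far fewer than the 8 to 10 items per half suggested later in this section and were chosen only to keep the illustration short. The result is the uncorrected coefficient, before any Spearman-Brown adjustment.

```python
import random
from statistics import mean


def pearson_r(x, y):
    """Pearson's correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def split_half_r(responses, seed=None):
    """Uncorrected split-half correlation for one random division of items.

    responses: one row per respondent, one column per item.
    """
    n_items = len(responses[0])
    order = list(range(n_items))
    random.Random(seed).shuffle(order)  # one random division of the items
    half_a, half_b = order[: n_items // 2], order[n_items // 2:]
    # Each respondent's score on each half is the sum of that half's items.
    scores_a = [sum(row[i] for i in half_a) for row in responses]
    scores_b = [sum(row[i] for i in half_b) for row in responses]
    return pearson_r(scores_a, scores_b)


# Hypothetical responses: 5 respondents x 6 items on a 1-5 scale.
data = [
    [1, 2, 1, 2, 1, 1],
    [2, 2, 3, 2, 2, 3],
    [3, 3, 3, 4, 3, 3],
    [4, 4, 5, 4, 4, 4],
    [5, 4, 5, 5, 5, 5],
]
print(f"split-half r = {split_half_r(data, seed=1):.2f}")
```

Changing `seed` produces a different random division of the items and, usually, a slightly different coefficient.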

One complication in using the split-half reliability test is that the correlation coefficient may understate the reliability of the measure because, other things being equal, a longer scale is more reliable than a shorter one. Because the split-half approach divides the scale in two, each half is shorter than the whole scale and thus will appear less reliable than the scale as a whole. To correct for this, we can adjust the correlation coefficient by applying the Spearman-Brown formula, as Shulman did:

$$ r = \frac{2 r_i}{1 + r_i} $$

Where:

$r_i$ = uncorrected correlation coefficient (the correlation between the two halves)

$r$ = corrected correlation coefficient (reliability coefficient)

To illustrate the effect of the Spearman-Brown formula, suppose we have a 20-item scale with a correlation between its two halves of $r_i$ = .70, which is below the minimum needed to demonstrate reliability. The Spearman-Brown formula corrects this as follows:

$$ r = \frac{2(.70)}{1 + .70} = \frac{1.40}{1.70} = .82 $$

The Spearman-Brown formula thus has a substantial effect, raising the coefficient from .70, well below .80, to .82, just above it. If we had obtained these results with an actual scale, we would conclude that its reliability was adequate.
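The correction is easy to check directly. The Python sketch below implements the general Spearman-Brown prophecy formula, r = n * r_i / (1 + (n - 1) * r_i), where n is the factor by which the scale is lengthened; with n = 2 it reduces to the split-half correction used in the text. The function name `spearman_brown` and the `length_factor` parameter are labels chosen for this illustration.

```python
def spearman_brown(r_half: float, length_factor: float = 2.0) -> float:
    """Spearman-Brown correction of a reliability coefficient.

    With length_factor=2 this is the split-half form 2*r_i / (1 + r_i),
    estimating full-scale reliability from the correlation between halves.
    """
    n = length_factor
    return n * r_half / (1 + (n - 1) * r_half)


corrected = spearman_brown(0.70)
print(f"{corrected:.2f}")  # 0.82
```

Note that the correction can only raise a positive coefficient, and a perfect half-to-half correlation of 1.0 stays at 1.0.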

Using the split-half technique requires two preconditions that can limit its applicability. First, all the items in the scale must measure the same variable. If the scale in question is a jumble of items measuring several different variables, then it is meaningless to divide it and compare the halves. Second, the scale must contain a sufficient number of items so that, when it is divided, the halves do not become too short to be considered as scales themselves. A suggested minimum is 8 to 10 items per half (Goode and Hatt 1952, p. 236). Because many measures are shorter than these minimums, however, it may not be possible to assess their reliability with the split-half technique.

A number of other approaches to internal consistency reliability are sometimes used to overcome the weaknesses of the split-half approach. After all, the split-half approach uses only one random division of the scale into two halves. Randomly dividing a scale's items into halves could produce many different arrangements, and each would yield a slightly different correlation between the halves. One common solution is Cronbach's alpha, which may be thought of as the average of all possible split-half correlations. Conceptually, the scale is divided into every possible pair of halves, a correlation is computed for each division, and the average of those correlations is alpha (Cronbach 1951). This is not how Cronbach's alpha is actually calculated, but it does describe the logic of the procedure. Another approach to internal consistency reliability is to correlate each item in the scale with every other item and use the average of these inter-item correlations as the measure of reliability. A related approach correlates each item with the overall scale score. (Statistical packages such as SPSS contain procedures that will produce Cronbach's alpha as well as other reliability tests based on inter-item correlations.)
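As the text notes, alpha is not computed by literally averaging every split-half correlation. The usual computational formula (Cronbach 1951) is alpha = (k / (k - 1)) * (1 - sum of the item variances / variance of the total scores), where k is the number of items. The Python sketch below implements that formula together with the average inter-item correlation. Function names and data are hypothetical, and the sketch assumes complete data (no missing responses) with all items scored in the same direction. Population variances are used throughout; because the same divisor appears in the numerator and denominator of the ratio, sample variances would give the same alpha.

```python
from statistics import mean, pvariance


def pearson_r(x, y):
    """Pearson's correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def item_columns(responses):
    """Transpose respondent rows into one list of scores per item."""
    return [list(col) for col in zip(*responses)]


def cronbach_alpha(responses):
    """k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    items = item_columns(responses)
    k = len(items)
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


def mean_inter_item_r(responses):
    """Average Pearson correlation over all distinct pairs of items."""
    items = item_columns(responses)
    k = len(items)
    pairs = [pearson_r(items[a], items[b])
             for a in range(k) for b in range(a + 1, k)]
    return mean(pairs)


# Hypothetical responses: 5 respondents x 6 items on a 1-5 scale.
data = [
    [1, 2, 1, 2, 1, 1],
    [2, 2, 3, 2, 2, 3],
    [3, 3, 3, 4, 3, 3],
    [4, 4, 5, 4, 4, 4],
    [5, 4, 5, 5, 5, 5],
]
print(f"alpha = {cronbach_alpha(data):.2f}")
print(f"mean inter-item r = {mean_inter_item_r(data):.2f}")
```

Unlike a single split-half coefficient, neither statistic depends on how the items happen to be divided, which is the weakness of the split-half approach that these methods are meant to address.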

Internal consistency reliability tests have several advantages. They require only one testing session and no control group. They also give the clearest indication of reliability. For these reasons, researchers prefer to use these methods of assessing reliability whenever possible. The only disadvantage, as we noted, is that we cannot always use them. Shulman’s approach teaches a lesson, however: Use more than one test, if possible, to assess both reliability and validity. These issues are sufficiently important that the expenditure of time is justified.

Measurement with Minority Populations

Researchers often first assess the validity and reliability of measuring instruments by applying them to white, non-Hispanic respondents, because they find such people to be the most accessible. We should almost never assume, however, that such assessments generalize to minority populations (Becerra and Zambrana 1985; Tran and Williams 1994). The development of such instruments typically does not consider the unique cultural characteristics and attitudes of minorities. For some minorities, such as Asians and Hispanics, language differences mean that an English-language interview would have some respondents answering in a second language. Researchers cannot assume that such respondents will understand words and phrases as well as, or in the same way as, a person whose first language is English. In addition, some concepts in English have no precise equivalent in another language.

It is important, therefore, to refine measuring instruments to ensure that they are valid and reliable measures among minorities. A study of mental health among Native Americans, for example, had to drop the word "blue" as a descriptor of depression, because that word had no equivalent meaning among the Native Americans (Manson 1986). Researchers also had to add a category of "traditional healer" to a list of professionals to whom a Native American might turn for help. A study of Eskimos found that cultural context often caused different interpretations of questions. Because Eskimo culture emphasizes tolerance and endurance, Eskimos are less likely than Anglo-Americans to give in to pain by not working. A positive response from an Eskimo to a question like "Does sickness often keep you from doing your work?" is thus a much more potent indicator of distress than the same answer from an Anglo-American.

These illustrations should make clear that measurement in social research must be culturally sensitive. When conducting research on a group with a culture different from that of the researchers, the researchers can take a number of steps to produce more valid and reliable measurement instruments (Marin and VanOss Marin 1991; Tran and Williams 1994):

- Researchers can immerse themselves in the culture of the group under study, experiencing the daily activities of life and the cultural products as the natives do.
- Researchers should use key informants, people who participate routinely in the culture of the group under study, to help develop and assess the measurement instruments.
- When translating an instrument from English into another language, researchers should use the most effective translation methods, usually double translation (translation from English into the target language and then back into English by an independent person), to check for errors or inconsistencies.
- After developing or translating measurement instruments for use with minority populations, researchers should test the instruments for validity and reliability on the population they intend to study.

The range of precision in measurement is quite broad—from the cook who measures in terms of pinches, dashes, and smidgens to the physicist who measures in angstroms (0.003937 millionths of an inch). No matter whether a measurement is crude or precise, it is important to recognize that all measurement involves some component of error (Alwin 2007). There is no such thing as an exact measurement. Some measurement devices in the social sciences are fairly precise. Others, however, contain substantial error components, because most of our measures deal with abstract and shifting phenomena, such as attitudes, values, or opinions, which are difficult to measure with a high degree of precision. The large error component in many of our measurements means that researchers must pay close attention to the different types and sources of error. In measurement, researchers confront two basic types of error: random and systematic. In fact, we can modify the formula from the measurement theory introduced earlier in this chapter with the recognition that the error term in that formula, E, is actually made up of two components:

E = R + S

where R refers to random error and S refers to systematic error. Now, our measurement formula looks like this:

X = T + R + S

Our measurement or observation of a phenomenon is a function of the true nature of that phenomenon along with any random and systematic error that occurs in the measurement process.
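The model X = T + R + S can be illustrated with a small simulation. The sketch assumes, for illustration only, a true score of 50, random error drawn independently for each observation from a normal distribution with mean 0 and standard deviation 5, and systematic error that adds a constant 3 points to every observation. All of these values, and the function name `observe`, are hypothetical. Averaged over many observations, random error largely cancels out, whereas systematic error shifts every observation in the same direction and does not cancel.

```python
import random
from statistics import mean, stdev


def observe(true_score, n, random_sd, systematic_bias, seed=0):
    """Simulate n observations of one phenomenon under X = T + R + S.

    R: random error, drawn independently for each observation from a
       normal distribution with mean 0 and standard deviation random_sd.
    S: systematic error, the same constant added to every observation.
    """
    rng = random.Random(seed)
    return [true_score + rng.gauss(0, random_sd) + systematic_bias
            for _ in range(n)]


T = 50.0  # hypothetical true score
random_only = observe(T, 10_000, random_sd=5.0, systematic_bias=0.0)
with_bias = observe(T, 10_000, random_sd=5.0, systematic_bias=3.0)

print(f"true score:                 {T:.2f}")
print(f"mean, random error only:    {mean(random_only):.2f}")
print(f"mean, random + systematic:  {mean(with_bias):.2f}")
print(f"SD, random error only:      {stdev(random_only):.2f}")
print(f"SD, random + systematic:    {stdev(with_bias):.2f}")
```

Because both series use the same seed, they differ only by the constant bias: their spreads are essentially identical, and only the mean of the second series moves away from the true score.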