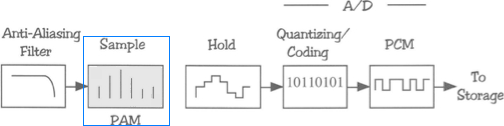

Discussion 8.1: Digital Specifications. An audio system is set with the following specifications: 20-bit, 48 kHz. Please calculate the following: Signal-to-noise (signal-to-error); Frequency respons

Sampling Frequency

One important factor in defining the sonic accuracy of a digital audio recording or transmission system is its sampling frequency.

In order to properly represent the amplitude variations of any frequency, a digital recording system must be able to supply a minimum of two pulses (or amplitude captures) per cycle for any frequency. This translates to the following rule:

The sampling rate must be at least two times the highest frequency of the audio to be captured. This minimum is known as the Nyquist rate, and half of the sampling rate is the Nyquist frequency. So, in order to capture audio with frequency components up to 20 kHz, the sampling rate of the system must be at least 40 kHz. In practical terms, it needs to be a bit more than that to allow for the real-world limitations of filters and other components; hence the CD-spec sampling rate of 44.1 kHz for a theoretical frequency response up to 20 kHz.
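The two-samples-per-cycle rule can be sketched as a pair of small helpers. The function names here are illustrative only and do not come from the text; the sketch ignores the extra margin that real filters need.

```python
def min_sampling_rate(highest_freq_hz: float) -> float:
    """Smallest sampling rate that gives two samples per cycle of the highest frequency."""
    return 2.0 * highest_freq_hz


def max_capturable_freq(sampling_rate_hz: float) -> float:
    """Highest frequency a given sampling rate can represent (half the sampling rate)."""
    return sampling_rate_hz / 2.0


print(min_sampling_rate(20_000))    # 40000.0
print(max_capturable_freq(44_100))  # 22050.0
print(max_capturable_freq(48_000))  # 24000.0
```

The gap between 20 kHz and 22.05 kHz is the headroom that the 44.1 kHz CD rate leaves for practical filtering.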

For every added bit, the number of possible amplitudes doubles (n bits give $2^n$ possible amplitudes), yielding an additional 6 dB of dynamic range or signal-to-noise. This can be written as follows:

$$S/E = 20 \log_{10}\left(2^{n}\right)$$

where S/E = the signal-to-noise or signal-to-error of the system, in dB; n = number of bits.
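As a quick check of the formula, the sketch below evaluates it for a few common bit depths, including the 20-bit case from the discussion prompt. The function name is an illustrative choice, and the result is the theoretical figure only, not what a real converter achieves.

```python
import math


def signal_to_error_db(n_bits: int) -> float:
    """Theoretical S/E in dB for n_bits of resolution: 20 * log10(2 ** n)."""
    return 20.0 * math.log10(2 ** n_bits)


for bits in (12, 16, 20, 24):
    print(f"{bits}-bit: {signal_to_error_db(bits):.1f} dB")
# 12-bit: 72.2 dB
# 16-bit: 96.3 dB
# 20-bit: 120.4 dB
# 24-bit: 144.5 dB
```

Each step of one bit adds roughly 6.02 dB, which is where the "6 dB per bit" rule of thumb comes from.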

So why not just use infinite bit-depth to capture the signal's amplitudes faithfully? As with movies and images, it comes down to the practicality of storage space and transmission throughput or bandwidth. As data storage becomes cheaper and capacity increases, so does the bit-depth of our digital audio systems. What began as 12-bit audio in early digital sampling systems soon became 16-bit audio in early Pro Tools and other DAW systems, followed by 24-bit and then 32-bit floating-point digital recording systems.

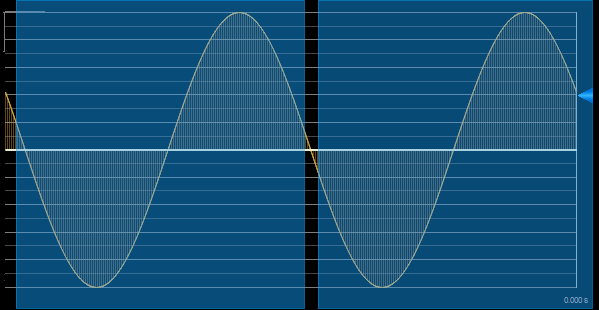

The inevitable question: if I have a 20 kHz wave and I only represent it with two pulses (or samples) per cycle, how can the system properly represent and reconstruct my original wave, with the nuances in between the two points?

Well, what is the difference between a 20 kHz sine wave and a 20 kHz square wave? The square wave adds an infinite series of odd harmonics (beginning at 60 kHz). If, in the last stage of my D/A process, I filter out everything above the Nyquist frequency, what am I left with? The 20 kHz sine wave, i.e. my original signal. Make sense? This is precisely the implication of the Nyquist theorem: as long as my sampling rate is at least twice my highest frequency, any amplitude nuance in my audio signal will be tracked properly (allowing for quantization error due to limited bit depth, of course).
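The square-wave argument can be illustrated with a small sketch that lists the components of an ideal square wave and then applies an idealized low-pass filter at the Nyquist frequency. The helper names and the brick-wall filter are simplifying assumptions, not a model of a real D/A converter.

```python
def square_wave_harmonics(fundamental_hz, count):
    """First `count` components of an ideal square wave.

    Components sit at odd multiples k of the fundamental,
    with relative amplitude 1/k.
    """
    return [(k * fundamental_hz, 1.0 / k) for k in range(1, 2 * count, 2)]


def reconstruction_filter(components, sampling_rate_hz):
    """Idealized low-pass: keep only components at or below the Nyquist frequency."""
    nyquist_hz = sampling_rate_hz / 2.0
    return [(f, a) for f, a in components if f <= nyquist_hz]


components = square_wave_harmonics(20_000, 5)
print([f for f, _ in components])  # [20000, 60000, 100000, 140000, 180000]

survivors = reconstruction_filter(components, 44_100)
print(survivors)  # [(20000, 1.0)]
```

Only the 20 kHz fundamental survives the filter, which is the sine wave the text describes.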

Noise Immunity

One of the great advantages offered by digital audio is its relative immunity to interference from noise and other audio degradations. Once our signal is in digital form, it is a robust high-voltage, fully modulated PCM stream. Noise infiltrating that stream is virtually ignored unless it is so strong that the system can no longer distinguish a 1 pulse from a 0 pulse, an unlikely scenario. In contrast, an analog system has no ability to distinguish between wanted signal and unwanted noise: both contribute equally to the analog waveform's amplitude at any moment in time.
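A minimal sketch of this idea: bits are sent as two voltage levels, noise is added, and the receiver decides each bit by comparing against a midpoint threshold. The 5 V level, the uniform noise model, and all names are assumptions made for illustration only.

```python
import random

HIGH_V = 5.0              # assumed voltage for a 1 pulse (0 V for a 0 pulse)
THRESHOLD_V = HIGH_V / 2  # receiver decision point


def transmit(bits, noise_peak_v, rng):
    """Turn bits into voltages and add uniform noise of up to +/- noise_peak_v."""
    return [b * HIGH_V + rng.uniform(-noise_peak_v, noise_peak_v) for b in bits]


def receive(voltages):
    """Decide each bit by comparing the voltage to the threshold."""
    return [1 if v > THRESHOLD_V else 0 for v in voltages]


rng = random.Random(0)
bits = [rng.randint(0, 1) for _ in range(1000)]
noisy = transmit(bits, noise_peak_v=1.0, rng=rng)

print(receive(noisy) == bits)  # True
```

As long as the noise peak stays below the distance to the threshold (2.5 V here), every bit is recovered exactly, whereas the same noise added to an analog waveform would remain part of the signal.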

Recording, Transmission, and Storage

The need for storage space or transmission throughput is determined directly by the sampling frequency (and hence the frequency response) and bit depth of the signal, as well as the number of simultaneous audio channels. Specifically, for each second of recording, the amount of data generated will equal: bit depth × sampling frequency × number of channels. Thus, for a stereo CD-quality recording, one second of data will take up 16 (bits) × 44.1 kHz × 2 (channels) ≈ 1,411 kbits, a rate of about 1.4 Mbits/s. With 8 bits in every byte, this is equivalent to a little over 10 megabytes per minute of stereo recording (1.4 M ÷ 8 × 60 ≈ 10.6 MB).

Note: Actual data transmission rates will be higher due to the need for additional word identification bits, error detection/correction schemes, embedded word clock/timing data, etc.
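The data-rate arithmetic above can be sketched as follows. The function names are illustrative, a megabyte is assumed to be 1,000,000 bytes, and the figures cover raw audio data only, without the overhead described in the note.

```python
def bit_rate(bit_depth, sampling_rate_hz, channels):
    """Raw audio data rate in bits per second."""
    return bit_depth * sampling_rate_hz * channels


def megabytes_per_minute(bits_per_second):
    """Storage per minute, assuming 8 bits per byte and 1 MB = 1,000,000 bytes."""
    return bits_per_second / 8 * 60 / 1_000_000


cd = bit_rate(16, 44_100, 2)
print(cd)                                  # 1411200
print(round(megabytes_per_minute(cd), 1))  # 10.6
```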