paper

Sampling

The Purpose of Sampling

Sampling Terminology

Populations and Samples

Sampling Frames

A Classic Sampling Disaster

Probability Samples

Simple Random Sampling

Systematic Sampling

Stratified Sampling

Area Sampling

Estimating Sample Size

Nonprobability Samples

Availability Sampling

Snowball Sampling

Quota Sampling

Purposive Sampling

Dimensional Sampling

Sampling with Minority Populations

A Note on Sampling in Practice

Review and Critical Thinking

Main Points

Important Terms for Review

Critical Thinking

Exploring the Internet

For Further Reading

Exercises for Class Discussion

A number of correctional systems have established programs that use behavior modification techniques to shape inmate behavior by rewarding sought-after behavior and withholding privileges from those who are recalcitrant or hostile. Each inmate who is placed in such a program becomes, in a sense, a test of the hypotheses about behavior change derived from behavioral theory. What can we conclude, however, if one inmate’s behavior changes in a way that supports these hypotheses? Will the program work with other inmates?

This is the issue at the core of this chapter. Can knowledge gained from one or a few cases be considered knowledge about a whole group of people? The answer depends on whether the inmate is representative of some larger group of which the inmate is a “sample.” Does he or she represent all inmates? Only inmates in a particular prison? Just inmates who have committed certain offenses? Or is this inmate not representative of any larger group? These issues are at the center of the problem of sampling, or selecting a few cases out of some larger grouping for study. All of us have had experience with sampling. Cautiously tasting a spoonful of soup is a process of sampling to see how hot it is; taking a bite of a new brand of pizza is a process of sampling to see if we like it. All sampling involves attempting to make a judgment about a whole something—a bowl of soup, a brand of pizza, or an inmate population—based on an analysis of only a part of that whole. Scientific sampling, however, is considerably more careful and systematic than casual, everyday sampling. In this chapter, we discuss the fundamentals of sampling along with the benefits and disadvantages of various sampling techniques.

The Purpose of Sampling

When we first encounter the subject of sampling, a common question is this: Why bother? Why not just study the whole group? A major reason for studying samples rather than whole groups is that the whole group sometimes is so large that studying it is not feasible. For example, human service workers might want to learn about welfare recipients, the mentally ill, prison inmates, or some other large group of people. It would be difficult—and often impossible—to study all members of these groups. Sampling, however, allows us to study a workable number of cases from the large group to derive findings that are relevant to all members of the group.

A second reason for sampling is that, surprising as it may seem, we can get better information from carefully drawn samples than we can from an entire group. This is especially true when the group under study is extremely large. For example, the United States takes a census of all residents at the beginning of each decade. Despite the vast resources that the federal government puts into the census, substantial undercounts and other errors occur. In fact, after recent censuses, numerous cities filed lawsuits complaining of alleged undercounts. Between the decennial censuses, the U.S. Census Bureau conducts sample surveys to update population statistics and collect data on other matters. The quality of the data gathered in these sample surveys actually is superior to the data in the census itself. The reason is that, with only a few thousand people to contact, the task is more manageable, involving better-trained interviewers, greater control over the interviewers, and fewer hard-to-find respondents. In fact, the U.S. Census Bureau even conducts a sample survey after each census as a check on the accuracy of that census. Indeed, were it not a constitutional requirement, the complete census might well be dropped and replaced by sample surveys.

Much research, then, is based on samples of people. Samples make possible a glimpse of the behavior and attitudes of whole groups of people, and the validity and accuracy of research results depend heavily on how samples are drawn. An improperly drawn sample renders the collected data virtually useless. So, an important consideration regarding samples is how representativethey are of the population from which we draw them. A representative sample is one that accurately reflects the distribution of relevant variables in the target population. In a sense, the sample should be considered a small reproduction of the population. Imagine, for example, that a researcher wants to study the success of unmarried teenage mothers in raising their children, with the goal of improving the provision of services to these adolescents. The research sample should reflect the relevant characteristics of unmarried teenage mothers in the community. Such characteristics might include age, years of education, and socioeconomic status. To be representative, the sample would have to contain the same proportion of unmarried teenage mothers at each age level, each educational level, and each socioeconomic status that exists in the community as a whole. In short, a representative sample should have all the same characteristics as the population. The representative character of samples allows the conclusions that are based on them to be legitimately generalized to the populations from which they are drawn. As we will see later in this chapter, nonrepresentative samples are useful for some research purposes, but researchers must always assess the representativeness of their samples to make accurate conclusions. Before comparing the various techniques for drawing samples, we will define some of the major terms that are used in the field of sampling.

Sampling Terminology

Populations and Samples

A sample is drawn from a population, which refers to all possible cases of what we are interested in studying. In the human services, the target population often is people who have some particular characteristic in common, such as all Americans, all eligible voters, all school-age children, and so on. A population need not, however, be composed of people. Recall from Chapter 4that the unit of analysis can be something other than individuals, such as groups or programs. Then, the target population is all possible cases of our unit of analysis. A sample consists of one or more elements selected from a population. The manner in which we select elements for the sample has enormous implications for the scientific utility of the research based on that sample. To select a good sample, we need to clearly define the population from which to draw the sample. Failure to do so can make generalizing from the sample observations highly ambiguous and result in inaccurate conclusions.

The definition of a population should specify four things: (1) content, (2) units, (3) extent, and (4) time (Kish 1965, p. 7). Consider the sample that James Greenley and Richard Schoenherr (1981) used to study the effects of agency characteristics on the delivery of social services. First, the content of the population refers to the particular characteristic that the members of the population have in common. For Greenley and Schoenherr, the characteristic that was common to the members of their population was that they were health or social service agencies. Second, the unit indicates the unit of analysis, which in our illustration is organizations rather than individuals or groups. (Although Greenley and Schoenherr collected data from practitioners and clients in the organizations, their focus was on comparing the performance of agencies.) Third, the extent of the population refers to its spatial or geographic coverage. For practical reasons, Greenley and Schoenherr limited the extent of their population to health and social agencies serving one county in Wisconsin. It was not financially feasible for them to define the extent of their population as all agencies in Wisconsin—or in the United States. Finally, the time factor refers to the temporal period during which a unit must possess the appropriate characteristic to qualify for the sample. Greenley and Schoenherr conducted a cross-sectional study, and only agencies that were in operation at the time those authors collected their data qualified. A longitudinal study might include agencies that came into existence during the course of the study.

With these four factors clearly defined, a population normally is adequately delimited. Then, we can construct what is called a sampling frame.

Sampling Frames

A sampling frame is a listing of all the elements in a population. In many studies, we draw the actual sample from this listing. The adequacy of the sampling frame is crucial in determining the quality of the sample, and the degree to which the sampling frame includes all members of the population is of major importance. Although an endless number of possible sampling frames exist, a few illustrations will describe some of the intricacies of developing good sampling frames.

In human service research, some of the most adequate sampling frames consist of lists of members of organizations. For example, if we wanted to expand the study, mentioned at the beginning of this chapter, regarding the impact of behavior modification on inmates, we could draw a larger sample of inmates in that prison using a straightforward sampling frame consisting of all inmates currently listed as residents of that institution. Given the care with which correctional facilities maintain accurate records of inmates, this sampling frame undoubtedly would be complete and accurate. Other examples of sampling frames based on organizational affiliation would be the membership rosters of professional groups, such as the National Association of Social Workers (NASW), the American Psychological Association, or the American Society of Criminology. These lists are not quite as accurate as an inmate roster, because people who have very recently joined the organization might not appear on the official lists. Clerical errors also might lead to a few missing names. These errors, however, would have little effect on the adequacy of the sampling frame.

When using organizational lists as a sampling frame, we must exercise caution about what we define as the population and about whom we make generalizations. The population consists of the sampling frame, and we can make legitimate generalizations only about the sampling frame. Many social workers, for example, do not belong to the NASW. Thus, a sample taken from the NASW membership roster represents only NASW members and not all social workers. When using organizational lists as sampling frames, then, it is important to assess carefully who the list includes and who the list excludes. Sometimes, research focuses on a theoretical concept that is operationalized in terms of an organizational list that does not include all actual instances of what the concept intends. For example, a study of poverty could operationalize the concept “poor” as those receiving some form of public assistance. Yet, many people with little or no income do not receive public assistance. In this case, the sampling frame would not completely reflect the population that the theoretical concept intended.

Some research focuses on populations that are quite large, such as residents of a city or a state. To develop sampling frames for household-based surveys of these populations, five listings could be considered: (1) telephone numbers, (2) post office address listings, (3) listings developed by survey research organizations, (4) utility subscribers, or (5) city directories. A listing of telephone numbers in a geographic area can be considered a sampling frame of households, although there are a number of problems with such a listing. Even today, some people do not have telephone service (about 7 percent of households) (U.S. Census Bureau 2008). Those without telephones tend to be concentrated among the poor, living in rural areas, and transient groups, such as the young. For a research project in which these groups are important, sampling based on telephone numbers could be very unrepresentative. Another problem is the growth in use of cell phones, with as many as 8 to 12 percent of households today having cell-phone service only (Keeter et al. 2007). Cell phones are not as clearly tied to a particular household or geographic area as traditional landlines are. So, a person may live in a different geographic area from where the cell phone is registered. This makes it difficult to know what phone numbers are to be included in a sampling frame when selecting a random sample of households for a particular community. If cell phones are left out, it threatens the representativeness of the sample, because cell phone-only people tend to be younger, less affluent, and more transient. Telephone books do not provide a very good listing of telephone numbers because of the many unlisted numbers and cell phones that are not listed in phone books. Instead, if telephone numbers are to be used as a sampling frame, some random number selection technique, such as random-digit dialing (RDD), can ensure that every household with telephone service has a chance of appearing in the sample. Of course, RDD does nothing about non-coverage resulting from the lack of telephone service in some households or some of the problems created by cell phones.

Another population listing that can serve as a sampling frame for a particular geographic area is a listing provided by the U.S. Postal Service of all residential addresses that it serves in an area (O’Muircheartaigh, Eckman, and Weiss 2002). These listings can be purchased from direct mail marketers, but they also have problems of noncoverage: Households can request that their address not be sold by the post office, and many rural addresses don’t show up on the listing.

A third source of household listings are those developed by some survey research organizations by having trained people walk through a community, physically locate every housing unit, and note its address. This probably is the most complete listing of households in a community, but it is also expensive—either to purchase or to develop yourself.

Another household listing sometimes used for sampling is a list of customers from a local electric utility. Although some households do not have telephone service, relatively few lack electricity, and the problem of noncoverage therefore is less significant. A major problem, however, comes from multiple-family dwellings, which often list utilities only in the name of the building’s owner rather than in the names of all the individual residents. The young, the old, and the unmarried are more likely to inhabit these multiple-family dwellings. Unless we supplement the utility listings, samples will systematically underrepresent people in these groups. Visiting the dwellings and adding the residents to the list of utility subscribers can overcome this problem, but this is a time-consuming task.

Finally, city directories are quite useful as household listings. Available in most libraries, city directories contain, among other things, an alphabetical listing of streets and addresses with residents’ names. This listing is fairly accurate, but it does exclude new construction.

A Classic Sampling Disaster

Some disastrous mistakes have occurred during sampling in past investigations, often because of inadequate sampling frames. These mistakes result in special chagrin when the investigator makes some precise—and easily refutable—predictions based on the sample. A classic example of this was the attempt Literary Digest magazine made to predict the outcome of the 1936 presidential race between Alfred Landon and Franklin Roosevelt. When predicting an election, the target population is all likely voters. Literary Digest, however, did not use a sampling frame that listed all likely voters. Rather, they drew their sample from lists of automobile owners and from telephone directories. On the basis of their sample results, they predicted that Landon would win by a substantial margin, but of course, Roosevelt won the election easily. Why the error in prediction? This question continues to generate debate in the professional literature, but the evidence points toward two possible factors, each serious in itself but deadly in combination (Bryson 1976; Cahalan 1989; Squire 1988). The first problem was a flawed sampling frame. In 1936, with the Great Depression at its peak, a substantial proportion of eligible voters, especially poorer ones, did not own cars or have telephones. In short, the sample was drawn from an inadequate sampling frame and did not represent the target population. In addition, because the poor are more likely to vote Democratic, most of the eligible voters excluded from the sampling frame voted for the Democratic candidate, Roosevelt. The second problem in the Literary Digest poll was a poor response rate. Although it employed a massive sample size, the pollsters used a mailed survey, and the percentage of respondents who returned the surveys was very low (about 23 percent). An independent follow-up investigation in a city where half the voters voted for Roosevelt and half for Landon found that only 15 percent of the Roosevelt supporters returned their surveys but that 33 percent of the Landon supporters did (Cahalan 1989). So, if a bias existed in the sampling frame against Roosevelt supporters, the nonresponse bias compounded it: Landon supporters were much more likely to return their surveys to Literary Digest. The result was the magazine’s embarrassingly inaccurate prediction. Although Literary Digest was a popular and respected magazine before the election, it never recovered from its prediction and went out of business a short time later.

We can construct adequate sampling frames for many human service projects from existing listings, such as those already mentioned, which are already available or readily made. Still, we must exercise caution in using such lists, because they may inadvertently exclude some people. In fact, human service research is especially vulnerable to this, because we often study populations that are difficult to enumerate. For example, undocumented aliens are, by definition, not listed anywhere. We know they make up a large segment of the population in such urban centers as Los Angeles, but a study of the poor in these areas that relied on a city directory obviously would miss large numbers of such people. Early studies of gay men also fell prey to this problem (Bell and Weinberg 1978; Hooker 1957). In some of these studies, the sampling frame was homosexuals who were listed as patients by psychotherapists who participated in the research. The studies concluded that homosexuality was associated with personality disturbance. Yet, it does not take great insight to recognize that the sampling frames did not list many gay men—namely, those feeling no need to see therapists—and, thus, were strongly biased toward finding personality disorders among gays.

We must assess sampling frames carefully to ensure that they include all elements of the population of interest. The remainder of this chapter is a discussion of the different ways in which to select samples. First, we discuss probability samples, for which we are most likely to have a sampling frame from which to draw the sample. Researchers use probability samples in some types of human service research, such as needs assessment and evaluation research. Then, we discuss nonprobability samples, which researchers use in assessing client functioning and in evaluating the effectiveness of intervention strategies.

Probability Samples

With luck, almost any sampling procedure could produce a representative sample, but that is little comfort to the researcher who wants to be as certain as possible that his or her sample is representative. Techniques that make use of probability theory can both greatly reduce the chances of getting a nonrepresentative sample and, more importantly, permit the researcher to estimate precisely the likelihood that a sample differs from the true population by a given amount. In these samples, known as probability samples, each element in the population has some chance of inclusion in the sample, and the investigator can determine the chances or probability of each element’s inclusion (Scheaffer, Mendenhall, and Ott 2006). In their simpler versions, probability sampling techniques ensure that each element has an equal chance of inclusion. In more elaborate versions, the researcher takes advantage of knowledge about the population to select elements with differing probabilities. The key point is that, whether the probabilities are equal or different, each element’s probability of inclusion in a probability sample is nonzero and known. Furthermore, probability sampling enables us to calculate sampling error, which is an estimate of the extent to which the values of the sample differ from those of the population from which it was drawn.

Simple Random Sampling

The simplest technique for drawing probability samples is simple random sampling (SRS), in which each element in the population has an equal probability of inclusion in the sample. Simple random sampling treats the target population as a unitary whole. We might begin with a sampling frame containing a list of the entire population—or as complete a list as we can obtain. We would then number the elements in the sampling frame sequentially and select elements from the list using a procedure known to be random. If we computerized the sampling frame, we could accomplish random selection merely by programming the computer to select randomly a sample of whatever size we desired. (Appendix B describes how to generate random samples both with the computer and by hand, using a table of random numbers.)

Although simple random samples have the desirable feature of giving each element in the sampling frame an equal chance of appearing in the sample, SRS often is impractical. A major reason for this is cost. Imagine doing a research project that calls for a national sample of 2,000 households. Even if one could obtain such a sample using SRS, which is unlikely, it would be prohibitively expensive to send interviewers all over the country to obtain the data. Furthermore, alternatives to SRS might be more efficient in terms of providing a high degree of representativeness with a smaller sample. Normally, SRS is limited to fairly small-scale projects that deal with populations of modest size for which we can obtain adequate sampling frames. The importance of SRS lies not in its wide application. Rather, SRS is the basic sampling procedure on which statistical theory is based, and it is the standard against which other sampling procedures are measured.

Systematic Sampling

A variation on SRS is called systematic sampling, which involves taking every kth element listed in a sampling frame. Systematic sampling uses the table of random numbers to determine a random starting point in the sampling frame. From that random start, we select every k th element into the sample. The value of k is called the sampling interval, and it is determined by dividing the population size by the desired sample size. For example, if we wanted a sample of 100 from a population of 1,000, then the sampling interval would be 10. From the random starting point, we would select every 10th element from the sampling frame for the sample. If the starting point is in the middle of the list, then we proceed to the end, jump to the beginning, and end up at the middle again.

In actual practice, dividing the population by the sample size usually does not produce a whole number, so the decimal is rounded upward to the next-largest whole number. This provides a sampling interval that takes us completely through the sampling frame. If we round downward, then the sampling interval becomes slightly too narrow, and we reach the desired sample size before we exhaust the sampling frame, which would mean that those elements farthest from the starting point have no chance of selection.

We commonly use systematic sampling when we draw samples by hand rather than by computer. The only advantage of systematic sampling over SRS is in clerical efficiency. In SRS, the random numbers will select elements scattered throughout the sampling frame. It is time-consuming to search all over the sampling frame to identify the elements that correspond with the random numbers. In systematic sampling, we proceed in an orderly fashion through the sampling frame from the random starting point.

Unfortunately, systematic sampling can produce biased samples, although this is rare. The difficulty occurs when the sampling frame consists of a population list that has a cyclical or recurring pattern, called periodicity. If the sampling interval happens to be the same as that of the cycle in the list, then it is possible to draw a seriously biased sample. For example, suppose we were sampling households in a large apartment building. The apartments are listed in the sampling frame by floor and apartment number (2A, 2B, 2C, 2D, 2E, 2F, 3A, 3B, and so on). Furthermore, suppose that, on each floor, apartment F is a corner apartment with an extra bedroom and correspondingly higher rent than the other apartments on that floor. If we had a sampling interval of three and randomly chose to begin counting with apartment 2D, then every F apartment would appear in the sample, which would bias the sample in favor of the more expensive apartments and, thus, in favor of the more affluent residents of the apartment building. So, when we use systematic sampling techniques, we need to assess the sampling frame carefully for any cyclical pattern that might confound the sample and, if necessary, rearrange the list to eliminate the pattern. Or, we could use SRS instead of systematic sampling.

Stratified Sampling

With SRS and systematic sampling methods, we treat the target population as a unitary whole when sampling from it. Stratified sampling changes this by dividing the population into smaller subgroups, called strata, before drawing the sample and then drawing separate random samples from each of the strata.

Reduction in Sampling Error

One of the major reasons for using a stratified sample is that stratifying reduces sampling error for a given sample size to a level lower than that of an SRS of the same size. This is so because of a very simple principle: The more homogeneous a population on the variables under study, the smaller the sample size needed to represent it accurately. Stratifying makes each sub-sample more homogeneous by eliminating the variation on the variable used for stratifying. Perhaps a gastronomic example will help illustrate this point. Imagine two large, commercial-size cans of nuts, one labeled “peanuts” and the other labeled “mixed nuts.” Because the can of peanuts is highly homogeneous, only a small handful from it gives a fairly accurate indication of the remainder of its contents. The can of mixed nuts, however, is quite heterogeneous, containing several kinds of nuts in different proportions. A small handful of nuts from the top of the can cannot be relied on to represent the contents of the entire can. If the mixed nuts were stratified by type into homogeneous piles, however, then a few nuts from each pile could constitute a representative sample of the entire can.

Although stratifying does reduce sampling error, it is important to recognize that the effects are modest. We expect approximately 10 to 20 percent (or less) reduction in comparison to an SRS of equal size (Henry 1990; Sudman 1976). Essentially, the decision to stratify depends on two issues: the difficulty of stratifying, and the cost of each additional element in the sample. It can be difficult to stratify a sample on a particular variable if it is hard to get access to data on that variable. For example, we would find it relatively easy to stratify a sample of university students according to class level, because universities typically include class status as part of a database of all registered students. In contrast, we would find it difficult to stratify that same sample on the basis of whether the students had been victims of sexual abuse during childhood, because these data are not readily available and getting them would require a major study in itself. So, stratification requires either that the sampling frame include information on the stratification variable or that the stratification variable is easily determined. Telephone surveys and stratification of respondents by gender illustrate the latter situation. Telephone interviewers can simply ask to speak to the man of the house to obtain the male stratum and request to speak to the woman of the house for the female stratum. If no one of the desired gender is available, then the interviewer drops that household and substitutes another. The process may require some extra phone calls, but the time and cost of doing this can pay for itself in the quality of the sample. As for the effect of cost issues on the decision of whether to stratify, if the cost of obtaining data on each case is high, as in an interview survey, then stratifying to minimize sample size probably is warranted. If each case is inexpensive, however, then stratifying to reduce cost may not be worth the effort unless it can be easily accomplished.

Proportionate Sampling

When we use stratification to reduce sampling error, we normally use proportionate stratified sampling, in which the size of the sample taken from each stratum is proportionate to the stratum’s presence in the population. Consider a sample of the undergraduates at a college or university. Although the students differ on many characteristics, an obvious difference is their class standing. Any representative sample of the student body should reflect the relative proportions of the various classes as they exist at the college as a whole. If we drew an SRS, then the sample size would have to be quite large for the sample to reflect accurately the distribution of class levels. Small samples would have a greater likelihood of being disproportionate. If we stratify on class level, however, then we can easily make the sample match the actual class distribution, regardless of the sample size. Table 6.1 contains the hypothetical class distribution of a university student body. If a researcher wanted a sample of 200 students with these proportions of students accurately represented, stratifying could easily accomplish it. The researcher would begin by developing a sampling frame with the students grouped according to class level, then would draw a separate SRS from each of the four class strata in numbers proportionate to their presence in the population: 70 freshmen, 50 sophomores, 40 juniors, and 40 seniors.

Table 6.1 Hypothetical Proportionate Stratified Sample of University Students

| Proportion in University |

| Stratified Sample of 200 |

|

| Seniors | 20% | Seniors | 40 |

| Juniors | 20% | Juniors | 40 |

| Sophomores | 25% | Sophomores | 50 |

| Freshmen | 35% | Freshmen | 70 |

|

| 100% |

| 200 |

In actual practice, it is normal to stratify on more than one variable. In the case of a student population, for example, the researcher might want to stratify on sex as well as class level. That would double the number of separate subsamples from four to eight: senior men, senior women, junior men, and so on. Even though stratifying on appropriate variables always improves a sample, researchers should use stratification judiciously. Stratifying on a few variables provides nearly as much benefit as stratifying on many. Because the number of subsamples increases geometrically as the number of stratified variables and the number of categories increase, attempting to stratify on too many variables can excessively complicate sampling without offering substantially increased benefits in terms of a reduction in sampling error.

Disproportionate Sampling

In addition to reducing error, we use stratified samples to enable comparisons among various subgroups in a population when one or more of the subgroups is relatively uncommon. For example, suppose we were interested in comparing two-parent families receiving welfare with other families receiving welfare. If two-parent families make up only about 2 percent of families on the welfare rolls, then a large SRS of 500 welfare families would be expected to contain only 10 such families. This number is far too small to make meaningful statistical comparisons. Stratifying in this case allows us to draw a larger sample of two-parent families to provide enough cases for reliable comparisons to be made. This is called disproportionate stratified sampling, because we do not sample the strata proportionately to their presence in the population. This type of sample is different from most probability samples, where we achieve representativeness by giving every element in the population an equal chance of appearing in the sample. With a disproportionate stratified sample, each element of a stratum has an equal chance of appearing in the sample of that stratum, but the elements in some strata have a better chance of appearing in the overall sample than the elements of other strata do.

Selection of variables on which to stratify depends on the reason for stratifying. If we were stratifying to ensure sufficient numbers of cases for analysis in all groups of interest, as in the example of two-parent welfare families, then we would stratify on the variable that has a category with a small proportion of cases in it. This often is an independent variable and involves disproportionate stratified sampling. On the other hand, if the goal of stratifying is to reduce sampling error, as is the case in proportionate stratified sampling, then we might use variables other than the independent variable. Stratifying has an effect in reducing sampling error only when the stratification variables relate to the dependent variables under study. So, we should select variables that we know or suspect of having an impact on the dependent variables. For example, a study concerning the impact of religiosity on delinquency might stratify on socioeconomic status, because studies have shown that this variable is related to delinquency involvement. Stratifying on a frivolous variable, such as eye color, would gain us nothing, because it is unlikely to relate to delinquency involvement. It is worth noting, however, that stratifying never hurts a sample. The worst that can happen is that the stratified sample will have about the same sampling error as an SRS of equivalent size, and that the stratifying efforts will have gone for naught. Research in Practice 6.1 illustrates a fairly complex use of a stratified sample.

Area Sampling

Area sampling (also called cluster sampling or multistage sampling) is a procedure in which we obtain the final units to include in the sample by first sampling among larger units, called clusters, that contain the smaller sampling units. A series of sampling stages are involved, working down in scale from larger clusters to smaller ones.

Research in Practice 6.1: Program Evaluation: Sampling for Direct Observation of Seat Belt Use

To encourage people to use seat belts, many states have passed legislation requiring the use of seat belts by drivers and passengers. Do these laws work? Do people wear their seat belts in states that require them? Trying to answer this question through the use of a survey probably would not produce very accurate results. Many people are likely to give the socially desirable response and claim on a survey that they use their seat belts when they actually don’t. Faced with the undesirability of a survey, researchers at the University of Michigan Transportation Research Institute turned to direct observation of drivers and passengers to accurately determine levels of seat belt use (Wagenaar and Wiviott 1986). Direct observation, however, had its own problems. Early on, the researchers determined that to reliably code the desired information about each vehicle, the vehicle had to be stopped at least briefly. This requirement greatly affected both the sampling and the observation procedures.

Because the main purpose of the study was to estimate seat belt use rates for the state of Michigan, a representative sample was crucial. Given the requirements for observation, the researchers needed a representative sample of places where vehicles temporarily stopped. They solved this unique sampling problem by selecting a sample of intersections with automatic traffic signals. The signals held the traffic long enough for accurate observations and in places with sufficient traffic to keep the observers efficiently busy. Specifically, using a multistaged stratified probability sampling procedure, the researchers selected 240 intersections. First, they identified all counties in Michigan with at least three intersections controlled by electronic signals. They also discovered that 20 of Michigan’s 83 counties did not meet this criterion, so those were grouped with adjacent counties to form 63 counties or county groups.

The 63 areas were then grouped into seven regions, which became strata for a stratified sample, with a separate sample drawn from each region. Given the great differences in population density, from high in the southeastern part of the state to very low in the northern and northwestern parts, the researchers drew a disproportionate sample to ensure some inclusion of the low-population-density areas. This was important, because they hypothesized that population density could relate to seat belt use rates. The counties and county groups in the seven regions constituted the primary sampling units (PSUs). The researchers selected 60 PSUs by using a weighting system such that the most populous counties (or county groups) had the highest probability of inclusion in the sample. In some cases, large population resulted in multiple PSUs being selected from the same county. For example Wayne County, which includes the city of Detroit, had 13 PSUs selected. This step narrowed the sample down to 32 counties or county groups that were representative of the state population.

For the next stage, the researchers selected a complete list of all intersections equipped with electronic signals in the selected counties and county groups to serve as a sampling frame. From this sampling frame, they randomly selected the final sample of intersections, for a total of 240 observation sites.

The study concluded that the legislation had the desired effect. The proportion of people using seat belts tripled after the legislation was passed. The effect did wear off somewhat over time, but a few years after the laws were passed, seat belt usage was still well over twice as high as it was before the legislation.

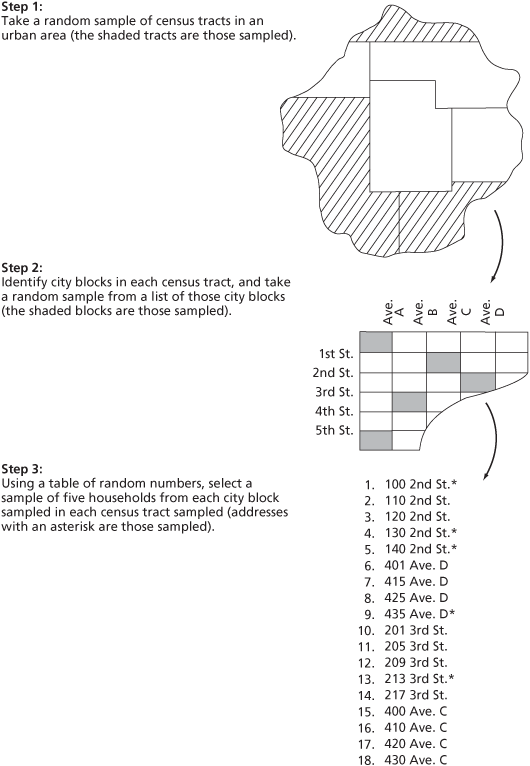

For example, imagine that we wanted to conduct a needs assessment survey that would determine the extent and distribution of preschool children with educational deficiencies in a large urban area. Simple random and systematic samples are out of the question, because no sampling frame listing all such children exists. We could, however, turn to area sampling, a technique that enables us to draw a probability sample without having a complete list of all elements in the population. The ultimate unit of analysis in this needs assessment would be households, because children live in households and we can create a sampling frame of households. We get there in the following way (see Figure 6.1). First, we take a simple random sample from among all census tracts in the urban area. (The U.S. Census Bureau divides urban areas into a number of census tracts, which are areas of approximately 4,000 people.) At the second stage, we list all the city blocks in each census tract in our sample, and then we select a simple random sample from among those city blocks. In the final stage, we list the households on each city block in our sample, and then we select a simple random sample of households on that list. With this procedure, we have an “area probability sample” of households in that urban area. (Public opinion polling agencies, such as Roper, typically use area sampling or a variant of it.) Finally, we interview each household in the sample regarding educational deficiencies among children in that household. If we were sampling an entire state or the whole country, then we would include even more stages of sampling, starting with even larger areas, but eventually working down to the household or individual level, whichever is our unit of analysis.

Figure 6.1 Drawing an Area Probability Sample

A number of factors can complicate area sampling. For example, selected blocks within an area often contain vastly different numbers of people—from high-density inner-city areas to the lower-density suburbs. We must adjust the number of blocks and the number of households per block that are selected into the sample to take into account the differing population densities. Another complication involves the estimation of sampling error. Fairly straightforward formulas for estimating sampling error exist for the simpler sampling techniques. With area sampling, however, the many stages of sampling involved make error estimation exceedingly complex (Kish 1965; Scheaffer, Mendenhall, and Ott 2006).

Error estimation is quite important for area samples. These samples are subject to greater error than other probability samples, because some error is introduced at each stage of sampling: The more stages involved, the more the sampling error accumulates. Other factors affecting sampling error are the size of the areas initially selected and their degree of homogeneity: The larger the initial areas and the greater their homogeneity, the greater the sampling error. This may seem odd, because with stratified sampling, greater homogeneity leads to less error. Remember, however, that with stratified sampling, we select a sample fromeach stratum, but with area sampling, we draw samples only from a few areas. If the few areas in a sample are homogeneous in comparison with the others, then they are less representative. Small, more numerous, heterogeneous clusters lead to more representative area samples. Despite the complexity, area sampling allows us to draw highly accurate probability samples from populations that, because of their size or geographical spread, we could not otherwise sample.

Estimating Sample Size

As we have seen, a key issue in selecting a sample is that it represent the population from which it was drawn. People sometimes assume that a larger sample is more representative than a smaller one and, thus, that one should go for the largest sample possible. Actually, however, deciding on an appropriate sample size is far more complicated. Five factors influence the sample size: (1) research hypotheses, (2) precision, (3) population homogeneity, (4) sampling fraction, and (5) sampling technique.

Research Hypotheses

One concern in establishing the desired sample size is that we have a sufficient number of cases with which to examine our research hypotheses. Consider a hypothetical study with three variables containing three values each. For an adequate test of the hypotheses, we need a cross tabulation of these three variables, and this would require a 3 × 3 × 3 table, or a table with 27 cells. If our sample were small, then many cells would have few or no cases in them, and we could not test the hypotheses. Johann Galtung (1967, p. 60) has suggested that 10 to 20 cases in each cell provides an adequate test of hypotheses. We might use disproportionate stratified sampling here to ensure an adequate number of cases in each cell. When that is not possible, Galtung suggests the following formula to determine sample size:

rn × 20 = sample size

where r refers to the number of values on each variable and n refers to the number of variables. Thus, for our hypothetical study,

rn × 20 = 33 × 20 = 27 × 20 = 540

So, we would need a sample size of 540 to feel reasonably assured that we had a sufficient number of cases in each cell. The formula works only if, as in our example, all variables have the same number of values. Furthermore, this technique does not guarantee an adequate number of cases in each cell. If some combination of variables is rare in the population, we may still find few cases in our sample.

Researchers often use statistical procedures in testing hypotheses, and most such procedures require some minimum number of cases to provide accurate results. What is the smallest legitimate sample size? This depends, of course, on the number of variables and on the values they can take. Generally, however, 30 cases is considered to be the bare minimum, and some researchers conservatively set 100 as the smallest legitimate sample size (Bailey 1987; Champion 1981). Anything smaller begins to raise questions about whether researchers can properly apply statistical procedures.

Precision

Another factor influencing sample size is the level of precision, or the amount of sampling error, that a researcher is willing to accept. Recall that sampling error refers to the difference between a sample value of some variable and the population value of the same variable. Suppose that the average age of all teenagers in a city is 15.4 years. If we draw a sample of 200 teenagers and calculate an average age of 15.1 years, then our sample statistic is close to the population value, but there is an error of 0.3 years. Remember, however, that the ultimate reason for collecting data from samples is to draw conclusions regarding the population from which we drew those samples. We have data from a sample, such as the average age of a group of teenagers, but we do not have those same data for the population as a whole. If we did, we would not need to study the sample, because we already would know what we want to know about the population.

If we do not know what the population value is, how can we assess the difference between our sample value and the population value? We do it in terms of the likelihood, or probability, that our sample value differs by a certain amount from the population value. (Probability theory is discussed in more detail in Chapter 15.) So, we establish a confidence interval, or a range in which we are fairly certain the population value lies. If we draw a sample with a mean age of 15.1 years and establish a confidence interval of ± (plus or minus) 1.2 years, then we are fairly certain that the mean age in the population is between 13.9 and 16.3 years of age. Probability theory also enables us to be precise about how certain we are. For example, we might be 95 percent certain, which is called the confidence level. (The computation of confidence intervals and confidence levels is beyond the intent of this book.) Technically, this means that if we draw a large number of random samples from our population and compute a mean age for each of those samples, then 95 percent of those means would have confidence intervals that include the population mean and 5 percent would not. What is the actual population mean? We don’t know, because we have not collected data from the whole population. We have data from only one sample, but we can conclude that we are 95 percent sure that the population mean lies within the confidence interval of that sample.

Precision is directly related to sample size: Larger samples are more precise than smaller ones. Thus, probability theory enables us to calculate the sample size that is required to achieve a given level of precision. Table 6.2 does this for simple random samples taken from populations of various sizes. As an example of how to read the table, a sample size of 1,023 would give you a 95 percent chance to obtain a sampling error of 3 percent or less with a population of 25,000 elements and a relatively heterogeneous population (a 50/50 split identifies a heterogeneous population, and an 80/20 split is a homogeneous population). In other words, a 95 percent chance exists that the population value is within 3 percent of the sample estimate. Again, to be technical, it means that if we draw many random samples and determine a confidence interval of 3 percent for each, then 95 percent of those confidence intervals will include the population value. Table 6.2 shows that sample size must increase when, other things being equal, the researcher wants less sampling error—that is, more precision—or the population size is larger or the population is more heterogeneous.

Table 6.2 Calculating Sample Size Based on Confidence Level, Sampling Error, Population Heterogeneity, and Population Size

|

| Sample Size for 95% Confidence Level | |||||

|

| ± 3% Sampling Error | ± 5% Sampling Error | ± 10% Sampling Error | |||

| Population Size | 50/50 Split | 80/20 Split | 50/50 Split | 80/20 Split | 50/50 Split | 80/20 Split |

| 100 | 92 | 87 | 80 | 71 | 49 | 38 |

| 250 | 203 | 183 | 152 | 124 | 70 | 49 |

| 500 | 341 | 289 | 217 | 165 | 81 | 55 |

| 750 | 441 | 358 | 254 | 185 | 85 | 57 |

| 1,000 | 516 | 406 | 278 | 198 | 88 | 58 |

| 2,500 | 748 | 537 | 333 | 224 | 93 | 60 |

| 5,000 | 880 | 601 | 357 | 234 | 94 | 61 |

| 10,000 | 964 | 638 | 370 | 240 | 95 | 61 |

| 25,000 | 1,023 | 665 | 378 | 243 | 96 | 61 |

| 50,000 | 1,045 | 674 | 381 | 245 | 96 | 61 |

| 100,000 | 1,056 | 678 | 383 | 245 | 96 | 61 |

| 1,000,000 | 1,066 | 682 | 384 | 246 | 96 | 61 |

| 100,000,000 | 1,067 | 683 | 384 | 246 | 96 | 61 |

| How to read this table: For a population with 250 members whom we expect to be about evenly split on the characteristics in which we are interested, we need a sample of 152 to make estimates with a sampling error of no more than ± 5%, at the 95% confidence level. A “50/50 split” means the population is relatively varied. An “80/20 split” means it is less varied; most people have a certain characteristic, a few do not. Unless we know the split ahead of time, it is best to be conservative and use 50/50. | ||||||

| Numbers in the table refer to completed, usable questionnaires needed for various levels of sampling error. Starting sample size should allow for ineligibles and nonrespondents. Note that when the population is small, little is gained by sampling, especially if the need for precision is great. | ||||||

SOURCE: From How to Conduct Your Own Survey by Priscilla Salant and Don A. Dillman, p. 55. Copyright © 1994 by John Wiley & Sons, Inc. Reproduced with permission of John Wiley & Sons, Inc.

In actuality, of course, we draw only one sample; probability theory tells us the chance we run of a single sample’s having a given level of error. There is a chance—5 times out of 100—that the sample will have an error level greater than 3 percent. In fact, there is a chance, albeit a minuscule one, that the sample will have a large error level. This is a part of the nature of sampling: Because we are selecting a segment of a population, there is always a chance that the sample will be unrepresentative of that population. The goal of good sampling techniques is to reduce the likelihood of that error. (Furthermore, one goal of replication in science, as discussed in Chapter 2, is to protect against the possibility that a researcher has unknowingly based the findings of a single study on a sample that contains a large error.)

If the 95 percent confidence level is not satisfactory for our purposes, then we can raise the odds to the 99 percent level by increasing the sample size. (This is not shown in Table 6.2.) In this case, only 1 out of 100 samples is likely to have an error level greater than 3 percent. A sample size large enough for this confidence level, however, might be expensive and time-consuming. For this reason, professional pollsters normally are satisfied with a sample size that enables them to achieve an error level in the range of 2 to 4 percent. Likewise, most scientific researchers are forced to accept higher levels of error—often as much as 5 to 6 percent with a 95 percent confidence level. At the other end of the spectrum, exploratory studies can provide useful data even though they incorporate considerably more imprecision and sampling error. So, the issue of sample size and error is influenced, in part, by the goals of the research project.

Population Homogeneity

The third factor affecting sample size is the variability of the sampled population. As we have noted, a large sample is more essential for a heterogeneous population than for a homogeneous one. Unfortunately, researchers may know little about the homogeneity of their target population and can make accurate estimates of population variability only after they draw the sample, collect the data, and at least partially analyze that data. On the surface, this would appear to preclude estimating sample size in advance. In fact, however, probability theory allows sample size to be estimated by simply assuming maximum variability in the population. In Table 6.2, the assumption of “50/50 split” means that we assume maximum variability. Such estimates are, of course, conservative: This means that the sample size estimates are larger than needed for a given level of precision if the actual variability in the population is less than assumed.

Sampling Fraction

A fourth factor influencing sample size is the sampling fraction, or the number of elements in the sample relative to the number of elements in the population (or n/N, where n is the estimated sample size ignoring sampling fraction and N is the population size). With large populations, we can ignore the sampling fraction, because the sample constitutes only a tiny fraction of the population. In Table 6.2, for example, a population of 10,000 calls for a sample size of only 370 (5 percent sampling error and 50/50 split), which is less than 4 percent of the population. For such samples, the research hypotheses, sampling error, and population homogeneity are sufficient to determine the sample size. With smaller populations, however, a sample that meets these criteria may constitute a relatively large fraction of the whole population and, in fact, may be larger than it needs to be (Moser and Kalton 1972). This is so because a sample that constitutes a large fraction of a population contains less sampling error than if the sample were a small fraction. In such cases, the sample size can be adjusted by the following formula:

Where:

n′ = adjusted sample size,

n = estimated sample size ignoring the sampling fraction

N = population size.

As a rule of thumb, this correction formula should be used if the sampling fraction is more than 5 percent. For example, suppose that a community action agency is conducting a needs assessment survey for a Native-American tribal organization with 3,000 members. On the basis of the research hypothesis, sampling error, and population variance on key variables, it is estimated that a sample size of 600 is needed. The sampling fraction, then, is n/N = 600/3,000 = 0.2, or 20 percent. Because this is well over 5 percent, we apply the correction:

| n′ | = | 600/[1 + (600/3, 000)] |

| n′ | = | 600/1.20 |

| n′ | = | 500 |

Thus, instead of a sample of 600, we need only 500 to achieve the same level of precision. At costs that often exceed $50 per interview, the savings of this adjustment often are significant.

Sampling Technique

The final factor influencing sample size is the sampling technique employed. The estimates discussed thus far are for simple random samples. More complex sampling procedures change the estimates of sample size. Area sampling, for example, tends to increase sampling error in comparison with SRS. We can obtain a rough estimate of sample sizes for area samples by simply increasing the suggested sizes in Table 6.2 by one half (Backstrom and Hursh-Cesar 1981). This estimate is crude and, probably, conservative, but it is simple to obtain. Stratified sampling, on the other hand, tends to reduce sampling error and to decrease the required sample size. Estimating sample sizes for stratified samples is relatively complex (Kish 1965; Scheaffer, Mendenhall, and Ott 2006).

In an assessment of the implications of scientific work for clinical application, the issue of precision in sampling comes to the fore. Practitioners need to exercise judgment regarding how scientifically sound the research is and whether it is sufficiently valid to introduce into practice. As we have emphasized, practitioners should view single studies with caution—irrespective of how low the sampling error is. As numerous studies begin to accumulate, we must then assess them in terms of how much error we can expect, given the sample size and the sampling technique. If the sampling errors appear to be quite low, then a few replications might confirm that the findings from these samples reflect the state of the actual population. With large sampling errors, however, the probability increases that the samples do not represent the population. In such cases, confidence in the outcomes can result only if a number of studies arrive at the same conclusions. More studies mean the drawing of more samples, which in turn reduces the likelihood that all the samples contain large sampling errors.

Nonprobability Samples

Probability samples are not required—or even appropriate—for all studies. Some research situations call for nonprobability samples, in which the investigator does not know the probability of each population element’s inclusion in the sample. Non-probability samples have some important uses (O’Connell 2000). First, they are especially useful when the goal of research is to see whether a relationship exists between independent and dependent variables, with no intent to generalize the results beyond the sample to a larger population. This sometimes is the case, for instance, in experimental research, where future research in other settings will establish generalizability (see Chapter 10).

A second situation where nonprobability samples are useful is in some qualitative research, where the goal is to understand the social process and meaning structure of a particular setting or group (Maxwell 2005). In such qualitative research, the research goal often is only to develop an understanding of one particular setting or group of people; issues of generalizing to other settings are either irrelevant or an issue for future research projects. As we will see in Chapters 9 and 16, some qualitative researchers see probability samples as inappropriate for, or at best irrelevant to, theoretically sound qualitative research.

A third situation in which nonprobability samples are useful is when it is impossible to develop a sampling frame of a population. With no complete list of all elements in a population, researchers cannot ensure that every element has a chance to appear in the sample. These populations sometimes are called “hidden populations,” because at least some of their elements are hidden and either difficult or impossible to locate. In fact, the members of hidden populations sometimes try to hide themselves from detection by researchers and others, because they engage in illegal or stigmatized behavior, such as drug use or criminal activity. Rather than giving up on the study of such populations, however, researchers use nonprobability samples.

Although nonprobability samples can be useful, they do have some important limitations. First, without the use of probability in the selection of elements for the sample, we can make no real claim of representativeness. There is simply no way of knowing precisely what population, if any, a nonprobability sample represents. This question of representativeness greatly limits the ability to generalize findings beyond the level of the sample cases.

A second limitation is that the degree of sampling error remains unknown—and unknowable. With no clear population represented by the sample, we have nothing with which to compare it. The lack of probability in the selection of cases means that the techniques employed for estimating sampling error with probability samples are not appropriate. It also means that the techniques for estimating sample size are not applicable to non-probability samples. Of the five criteria used in considering sample size among probability samples, the only one that comes into play for nonprobability samples is the first—namely, that researchers select sufficient cases to allow the planned types of data analysis. Even population homogeneity and the sampling fraction do not come into play, because the researcher does not know the exact size or composition of the population.

A final limitation of nonprobability samples involves statistical tests of significance. These commonly used statistics, which we will discuss in Chapter 15, indicate to the researcher whether relationships found in sample data are sufficiently strong to generalize to the whole population. Some of these statistical tests, however, are based on various laws of probability and assume a random process for selecting sample elements. Because nonprobability samples violate some basic assumptions of these tests, researchers should use these statistical tests with caution on data derived from such samples.

Availability Sampling

Availability sampling (also called convenience sampling or accidental sampling) involves the researcher’s taking whichever elements are readily available. These samples are especially popular—and appropriate—for research in which it is either difficult or impossible to develop a complete sampling frame. Sometimes it is too costly to do so; in other cases, it is impossible to identify all the elements of a population. Helen Mendes (1976), for example, used an availability sample in her study of single fathers. Because it was practically impossible to develop a sampling frame of all single fathers, she turned to teachers, physicians, social workers, and self-help groups for assistance. She asked these people to refer single fathers to her. The limitation on generalizability, however, seriously reduces the utility of findings based on availability samples. It is impossible for Mendes to argue, for example, that the single fathers she studied were representative of all single fathers. It may well be that only fathers with certain characteristics were likely to become a part of her sample.

Availability samples often are used in experimental or quasi-experimental research. This is because it often is difficult to get a representative sample of people to participate in an experiment—especially one that is lengthy and time-consuming. For example, Ronald Feldman and Timothy Caplinger (1977) were interested in factors that bring about behavior changes in young boys who exhibit highly visible antisocial behavior. Their research design was a field experiment calling for the children participating in the study to meet periodically in groups over an eight-month period. Groups met an average of 22.2 times for two to three hours each time. Most youngsters could be expected to refuse such a commitment of time and energy. Had the investigators attempted to draw a probability sample from the community, they probably would have had such a high refusal rate as to make the representativeness of their sample questionable. They would have expended considerable resources and still had, in effect, a nonprobability sample. So, they resorted to an availability sample. To locate boys who had exhibited antisocial behavior, they sought referrals from numerous sources: mental health centers, juvenile courts, and the like. For a comparison group of boys who were not identified as antisocial, they sought volunteers from a large community center association. Given the purpose of experimentation, representative samples are less important. Experiments serve to determine if we can find cause-and-effect relationships; the issue of how generalizable those relationships are becomes important only after the relationships have been established.

Availability sampling probably is one of the more common forms of sampling used in human service research, both because it is less expensive than many other methods and because it often is impossible to develop an exhaustive sampling frame. For example, in the 20 issues of the journal Social Work Research published from 1994 through 1999, at least 52 of the 88 research articles could be classified as reporting some form of availability sample. You can readily grasp the problems of trying to develop a sampling frame in the following studies:

Turning points in the lives of young inner-city men previously involved in violence, illegal drug marketing, and other crimes and now contributing to their community’s well-being: The sample consisted of 20 young men, most of whom were referred by intervention programs, pastors, and community leaders.

A study on methods for preventing HIV/AIDS transmission in drug-abusing, incarcerated women: The sample consisted of inmates at Rikers Island who were recruited with posted notices and staff referrals.

A study on effectiveness of a program to reduce stress, perceived stigma, anxiety, and depression for family members of people with AIDS: Participants were recruited from an AIDS service program.

Depression and resilience in elderly people with hip fractures: The sample consisted of 272 patients over age 65 who were hospitalized following hip fractures.

An exhaustive sampling frame that would make possible the selection of a probability sample in each of these studies might be, respectively, as follows:

All reformed/rehabilitated young adult offenders.

All drug-abusing, incarcerated women.

All family members of people infected with AIDS.

All elderly people with hip fractures.

Clearly, such probability sampling is beyond the realm of most investigators. Availability samples, though less desirable, make it possible for scientific investigation to move forward in those cases when probability sampling is impossible or prohibitively expensive.

Snowball Sampling

When a snowball is rolled along in wet, sticky snow, it picks up more snow and gets larger. This is analogous to what happens with snowball sampling: We start with a few cases of the type we want to study, and we let them lead us to more cases, which in turn lead us to still more cases, and so on. Like the rolling snowball, the snowball sample builds up as we continue to add cases. Because snowball sampling depends on the sampled cases being knowledgeable of other relevant cases, the technique is especially useful for sampling subcultures where the members routinely interact with one another. Snowball sampling also is useful in the investigation of sensitive topics, such as child abuse or drug use, where the perpetrators or the victims might hesitate to identify themselves if approached by a stranger, such as a researcher, but might be open to an approach by someone who they know shares their experience or deviant status (Gelles 1978).

Snowball sampling allows researchers to accomplish what Norman Denzin (1989) calls interactive sampling—that is, sampling people who interact with one another. Probability samples are all non-interactive samples, because knowing someone who is selected for the sample does not change the probability of selection. Interactive sampling often is theoretically relevant, because many social science theories stress the impact of associates on behavior. To study these associational influences, researchers often combine snowball sampling with a probability sample. For example, Albert Reiss and Lewis Rhodes (1967), in a study of associational influences on delinquency, drew a probability sample of 378 boys between the ages of 12 and 16. They then asked each member of this sample to indicate his two best friends. By correlating various characteristics of the juveniles and their friends, the researchers were able to study how friendship patterns affect delinquency.

This interactive element, however, also points to one of the drawbacks of snowball sampling: Although it taps people who are involved in social networks, it misses people who are isolated from such networks. Thus, a snowball sample of drug users is limited to those users who are part of some social network and ignores those who use drugs in an individual and isolated fashion. It is possible that drug users who are involved in a social network differ from isolated users in significant ways. Care must be taken in making generalizations from snowball samples to ensure that we generalize only to those people who are like those in our sample.

Quota Sampling

Quota sampling involves dividing a population into various categories and then setting quotas on the number of elements to select from each category. Once we reach the quota for each category, we put no more elements from that category into the sample. Quota sampling is like stratified sampling in that both divide a population into categories and then take samples from the categories, but quota sampling is a nonprobability technique that often depends on availability to determine precisely which elements will be in the sample. At one time, quota sampling was the method of choice among many professional pollsters. Problems deriving from efforts to predict the very close 1948 presidential election between Harry S. Truman and Thomas E. Dewey, however, caused pollsters to turn away from quota sampling and to embrace the newly developed probability sampling techniques. With its fall from grace among pollsters, quota sampling also declined in popularity among researchers. Presently, use of quota sampling is best restricted to those situations where its advantages clearly outweigh its considerable disadvantages. For example, researchers might use quota sampling to study crowd behavior, for which they cannot establish a sampling frame given the unstable nature of the phenomenon. A researcher who is studying reaction to disasters, such as a flood or a tornado, might use quota sampling where the need for immediate reaction is critical and takes precedence over sample representativeness.

Researchers normally establish quotas for several variables, including such common demographic characteristics as age, sex, race, socioeconomic status, and education. In addition, they commonly include one or more quotas directly related to the research topic. For example, a study of political behavior would probably include a quota on political party affiliation to ensure that the sample mirrored the population on the central variable in the study.

In quota sampling, interviewers do the actual selection of respondents. Armed with the preestablished quotas, interviewers begin interviewing people until they have their quotas on each variable filled. The fact that quota sampling uses interviewers to do the actual selection of cases is one of its major shortcomings. Despite the quotas, considerable bias can enter quota sampling because of interviewer behavior. Some people simply look more approachable than others, and interviewers naturally gravitate toward them. Interviewers also are not stupid. They realize that certain areas of major cities are less-than-safe places to go around asking questions of strangers, and protecting their personal safety by avoiding these areas can introduce obvious bias into the resulting sample.

While there is a risk of bias with quota samples, the technique does have some major positive attributes—namely, it is cheaper and faster than probability sampling. At times, these advantages can be sufficient to make quota sampling a logical choice. For example, if we wanted a rapid assessment of people’s reactions to some event that had just occurred, quota sampling would probably be the best approach.

Purposive Sampling

In the sampling procedures discussed thus far, one major concern has been to select a sample that is representative of—and will enable generalizations to—a larger population. Generalizability, however, is only one goal, albeit an important one, of scientific research. In some studies, the issue of control may take on considerable importance and dictate a slightly different sampling procedure. In some investigations, control takes the form of choosing a sample that specifically excludes certain types of people, because their presence might confuse the research findings. For example, if a researcher were conducting an exploratory study of a psychotherapeutic model of treatment, he or she might wish to choose people for the sample from among “ideal candidates” for psychotherapy. Because psychotherapy is based on talking about oneself and gaining insight into feelings, ideal candidates for psychotherapy are people with good verbal skills and the ability to explore and express their inner feelings. Because well-educated, middle-class people are more likely to have these characteristics, a researcher might choose them for the sample.

This is called purposive sampling, or judgmental sampling: The investigators use their judgment and prior knowledge to choose for the sample people who best serve the purposes of the study. This is not “stacking the deck” in the researcher’s favor, however. Consider the illustration given earlier: The basic research question is whether this type of psychotherapy can work at all. If we select a random sample, we get a variation based on age, sex, education, socioeconomic status, and a host of other variables that are not of direct interest in this study but that might influence receptive-ness to psychotherapy. Certainly, in a truly random sample, the effects of this variation would wash out. The sample, however, would have to be so large that we could not do psychotherapy on that many people. So, rather than use some other sampling technique, we choose a group that is homogeneous in terms of the factors that are likely to influence receptiveness to psychotherapy. This enables us to see whether psychotherapy works better than some other form of therapy. If it does not work with this ideal group, then we can probably forget the idea. If it does work, then we can generalize only to this group, with further research among other groups still required to see how extensively we can generalize the results.

Research in Practice 6.2 illustrates some of the kinds of research problems we can approach with nonprobability samples and how a sampling strategy might actually involve a creative combination of some of the sampling types discussed here.

Dimensional Sampling

It often is expeditious—if not essential—to use small samples. Small samples can be very useful, but we must exercise considerable care in drawing the sample. (The smallest sample size, of course, is the single case, which we will discuss in Chapter 11.) Dimensional sampling is a sampling technique for selecting small samples in a way that enhances their representativeness (Arnold 1970). The two basic steps to dimensional sampling are these: First, specify all the important dimensions or variables. Second, choose a sample that includes at least one case that represents each possible combination of dimensions.

We can illustrate this with a study of the effectiveness of various institutional approaches in the control of juvenile delinquency (Street, Vinter, and Perrow 1966). The population consisted of all institutions for delinquents. To draw a random sample of all those institutions, however, would have made a sample size that would tax the resources of most investigators. As an alternative, the researchers used a dimensional sample. The first step was to spell out the important conceptual dimensions. In terms of juvenile institutions, this investigation considered three dimensions, each containing two values, as illustrated in Table 6.3: organizational goals (custodial or rehabilitative), organizational control (public or private), and organizational size (large or small). In the second step, the researchers selected at least one case to represent each of the eight possibilities that resulted.

Table 6.3 An Illustration of Institutional Dimensions for a Dimensional Sample

|

| Custodial Goals | Rehabilitative Goals | ||

|

| Public | Private | Public | Private |

| Large Size |

|

|

|

|

| Small Size |

|

|

|

|

Dimensional sampling has a number of advantages that make it an attractive alternative in some situations. First, it is faster and less expensive than studying large samples. Second, it is valuable in exploratory studies with little theoretical development to support a large-scale study. Third, dimensional sampling provides more detailed knowledge of each case than we would likely gain from a large sample. With a large sample, data collection necessarily is more cursory and focused (which is justified if previous research has narrowed the focus of what variables are important).

Research in Practice 6.2: Behavior and Social Environments: Using Nonprobability Samples to Study Hidden Populations